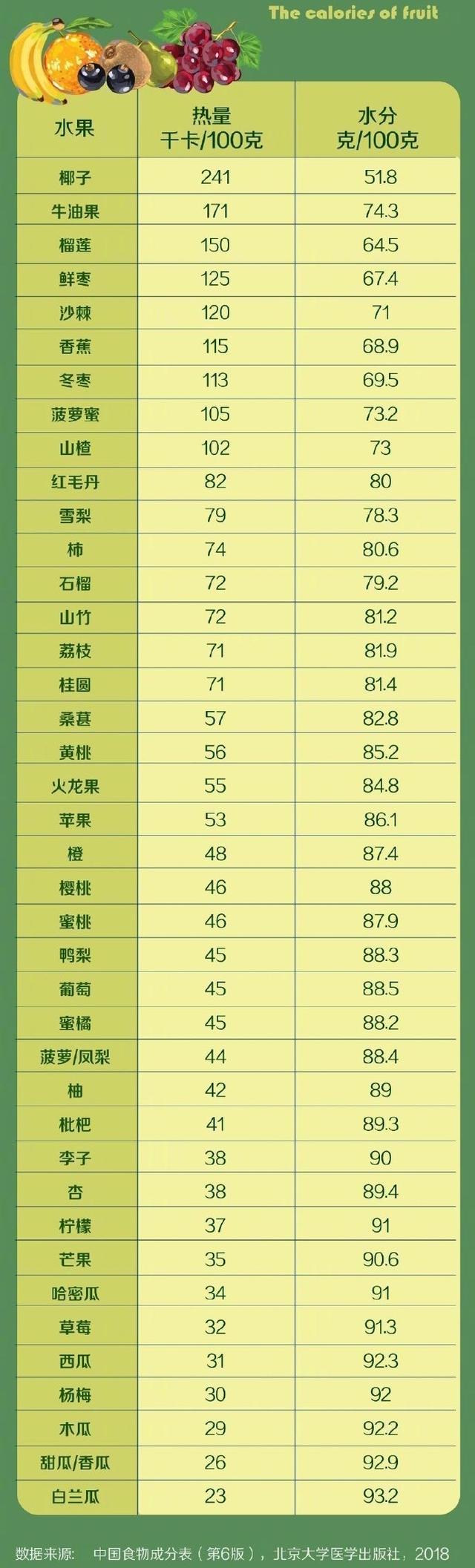

Since its release in November 2022, ChatGPT, a chatbot developed by the American artificial intelligence research company OpenAI, has become the fastest-growing consumer application in history and has attracted widespread attention. Its emergence marks a tipping point in the development of artificial intelligence and has pushed the competition among countries in scientific and technological innovation onto a new track. A leap in technology inevitably invites closer scrutiny of how it is applied. No matter how smart artificial intelligence services become, adapting to and meeting the needs of human development remains the fundamental direction. Looking ahead, discussing ChatGPT’s influence on people’s modes of production, lifestyles, ways of thinking, behavior, and values, as well as on industrial transformation and academic research, will help us use and govern this technology properly and think about the prospects for artificial intelligence.

In his System of Ethical Life, Hegel described destruction as an expanding bubble that bursts into countless tiny droplets. The image fits the development of artificial intelligence well. After the artificial intelligence bubble burst in 1956, it became many tiny droplets that splashed everywhere: AlphaGo and others in board games; AlphaFold and others in scientific research; LaMDA, ChatGPT, and others in language dialogue; Midjourney (used through Discord) and others in image generation. These technologies have gradually converged into a force that has drawn humanity into an era of intelligent generation.

ChatGPT: generating and embedding

Generation is the first feature of ChatGPT. Generation implies innovation, but this is disputed. Chomsky holds that ChatGPT discovers rules in massive data and then connects the data according to those rules to produce content that resembles human writing; he regards ChatGPT as a plagiarism tool. This view is somewhat inaccurate. Something new is produced when ChatGPT generates text. It is not new in the sense of existence, that is, it does not produce new objects; rather, it finds previously unseen objects among old things through the attention mechanism. In this sense it is new in the sense of attention. In 2017, the paper "Attention Is All You Need" proposed the Transformer, an architecture built on the concept of attention, which ChatGPT later adopted. It uses mechanisms such as self-attention and multi-head attention to make the emergence of new content possible. Moreover, ChatGPT may also generate text by reasoning, so its results cannot simply be written off as plagiarism.
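To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification for illustration only: the learned query, key, and value projections of a real Transformer are replaced by the identity, and the names `softmax` and `self_attention` are ours, not taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Each output vector is a weighted average of all input vectors; the
    weights depend on how strongly each pair of inputs matches.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        )
    return outputs

# Three toy "token" vectors (illustrative values only)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(tokens)
```

Every output row mixes information from all three inputs, which is the sense in which attention recombines existing material rather than copying a single source.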

Embedding is the second feature of ChatGPT; we can regard the embedding process as enriching some form of content. The development of intelligent technology has departed from the track of traditional technology. Traditional technology is often regarded as a single technical artifact, and its development follows a linear evolutionary model, whereas intelligent technology increasingly shows embeddability. For example, a smartphone is a platform in which many apps can be embedded, and ChatGPT can be embedded in search engines and in various applications (such as word processors). Such embedding clearly improves the capabilities of agents and is the basis of ChatGPT’s enhancement effect. According to Statista, as of January 2023 OpenAI had been closely integrated with technology, education, commerce, manufacturing, and other industries, and the trend toward technology embedding is increasingly evident. The degree of embedding affects how friendly a robot feels. At present, ChatGPT cannot be embedded in a robot as a voice program; in our interactions with it, it is more like a pen pal. In the future, companion robots and talking robots may matter more, with voice communication in which people talk and machines listen and respond.

ChatGPT’s black box status

For ChatGPT, transparency is a major problem. From a technical point of view, opacity stems from the fact that the technology cannot be explained. Technical experts therefore attach great importance to the interpretability of ChatGPT, and the black-box effect of neural networks gives them a headache. The operation of ChatGPT itself is difficult to explain. Stuart Russell has stated plainly that we do not know ChatGPT’s working principles and mechanisms. Moreover, he does not think that large language models bring us closer to real intelligence, and the interpretability of the algorithm is a bottleneck. To address this problem, experts can use technical methods such as reverse engineering to observe the mechanisms of a neural network and reach its underlying logic, and can use mechanistic interpretability methods to present the results in visual and interactive form. With these methods they have begun to open the black box of neural networks. However, the interpretability obtained this way is useful only to professional and technical personnel.

From a philosophical point of view, the black box is related to terminology. Difficult and obscure terms stand in the way of theoretical transparency. For example, the theoretical concepts on which the ChatGPT algorithm depends need to be clarified. In the paper "Attention Is All You Need", the attention mechanism is a common method that includes self-attention and multi-head attention. If these concepts are not clarified, outsiders will find them hard to understand, and the black box will remain closed. One of the most basic tasks is therefore to clarify attention itself, and this task is far from complete. Ethical problems caused by a lack of transparency will bring about a crisis of trust. If the principles of ChatGPT are hard to understand, its output becomes questionable. In the end, this defect will erode our trust in the technology and may even cost it our confidence altogether.

Enhancement effect of ChatGPT

ChatGPT is an intelligence-enhancing technology. It can intelligently generate all kinds of text, for example an outline of data ethics or a survey of the state of research on a frontier issue. This clearly enhances search and lets people accomplish more in a short time. The enhancement rests on generativeness and embeddedness: in terms of generation, it discovers brand-new objects through shifts of attention; in terms of embedding, it greatly improves the functions of the original agent.

As an intelligent technology, ChatGPT can clearly improve human work efficiency. This raises a basic question: the relationship between human beings and agents. We divide intelligence into entity intelligence and relational intelligence. Entity intelligence is the intelligence possessed by entities, such as human intelligence, animal intelligence, and the intelligence of physical robots. Relational intelligence describes the relationship between human beings and agents, and augmented intelligence is its main form. Augmented intelligence needs to be refined: philosophical treatment should bring out its general significance for people and technology, and moral treatment should give it normative significance.

However, ChatGPT’s enhancement effect raises some ethical problems. The first is the intelligence gap. At present access to this technology is limited and there is a certain technical threshold, which will widen the gap among users. This is the gap that arises from access to technology. The second is social equity. Unless this technology becomes as widespread as mobile phones, the fairness problem will be very visible: people who can work with ChatGPT are likely to improve their efficiency significantly, while those who cannot will stay at their original level. The third is dependence. Users experience the convenience of this technology as they use it; for example, it can quickly generate a curriculum outline, write a literature review, or search for key information. Users will gradually come to rely on it, and this dependence has serious consequences. Take literature search as an example: with this technology we can quickly find relevant literature and write a decent summary. But when ChatGPT generates the literature review, the user loses the academic training that the task provides, so researchers and students may end up losing their abilities in this area.

The relationship between ChatGPT and human beings

In the face of ChatGPT’s rapid advance, academia has generally taken a defensive stance; in particular, many universities have banned the use of this technology in homework and thesis writing. However, prohibition is not the best response. Technology is like water and seeps in through many channels, so rational guidance is the more appropriate approach.

To guide rationally, we need to consider the relationship between agents and human beings. I prefer to describe the relationship between the two as "adding the finishing touch". Take the generation of a text outline as an example. ChatGPT can generate a data ethics outline organized around the stages of data processing, such as collection, storage, and use, and the ethical issues at each stage. In a narrow sense this outline is appropriate and reflects some aspects of the ethical issues in data processing. From a broader point of view, however, it is too narrow: it understands data only through data processing itself and ignores other aspects, such as datafication and the relationship between data and lifestyle. What we can do, and want to do, is add the finishing touch to the generated text and bring it to life through adjustment. In this way the position of intelligently generated text becomes clear: it is the human finishing touch that plays the key role in generation. Without this stroke of the pen, intelligently generated text is just text without a soul. Without it, the meaning and value of human beings will be hard to guarantee, and the corresponding ethical problems will arise.

"Big data" refers to data sets so large in volume and so varied in category that they cannot be captured, managed, and processed by traditional database tools. First, "big" refers to data volume: a large data set is usually on the order of 10 TB, although in practice many enterprise users combine multiple data sets into PB-scale volumes. Second, it refers to the wide variety of data: the data come from many sources, and their types and formats are increasingly rich, going beyond the previously defined category of structured data to include semi-structured and unstructured data. Third, data are processed quickly, so that real-time processing is possible even when the volume is huge. The last feature is the high veracity of data. With growing interest in new data sources such as social data, enterprise content, and transaction and application data, the limits of traditional data sources have been broken, and enterprises increasingly need effective information capabilities to ensure authenticity and security.

Data collection: ETL tools are responsible for extracting data from distributed and heterogeneous data sources, such as relational data and flat data files, into the temporary middle layer, cleaning, converting and integrating them, and finally loading them into data warehouses or data marts, which become the basis of online analysis and data mining.
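The extract, clean, and load steps described above can be sketched with the Python standard library. This is a toy illustration, not a production ETL tool: the CSV content, the `sales` table, and the rule of dropping rows with a missing amount are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a flat file source
RAW_CSV = "id,name,amount\n1, alice ,10.5\n2,BOB,\n3,carol,7\n"

def extract(text):
    """Extract: read rows from a flat-file source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: clean and convert rows, dropping incomplete ones."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # assumed rule: skip rows with a missing amount
        cleaned.append(
            (int(row["id"]), row["name"].strip().lower(), float(row["amount"]))
        )
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
total = conn.execute("SELECT SUM(amount) FROM sales").fetchone()[0]
```

Keeping the three stages as separate functions mirrors the temporary middle layer in the description: data can be inspected after `transform` before anything reaches the warehouse.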

Access to data: relational databases, NoSQL, SQL, etc.

Infrastructure: Cloud storage, distributed file storage, etc.

Data processing: Natural language processing (NLP) is the discipline that studies language problems in human-computer interaction. The key to natural language processing is making computers "understand" natural language, so it is also called natural language understanding (NLU), and it is also known as computational linguistics. On the one hand, it is a branch of language information processing; on the other hand, it is one of the core topics of artificial intelligence.

Statistics: hypothesis testing, significance testing, analysis of variance, correlation analysis, t-test, chi-square analysis, partial correlation analysis, distance analysis, regression analysis, simple regression analysis, multiple regression analysis, stepwise regression, regression prediction and residual analysis, ridge regression, logistic regression analysis, curve estimation, factor analysis, cluster analysis, principal component analysis, fast clustering, and other clustering methods.

Data mining: Classification, Estimation, Prediction, affinity grouping or association rules, Clustering, Description and Visualization, complex data type mining (Text, Web, graphics, video, audio, etc.)

Prediction: prediction model, machine learning, modeling and simulation.

Results: Cloud computing, tag cloud, diagram, etc.

To understand the concept of big data, start with "big", which refers to data scale. Big data generally refers to data volumes above 10 TB (1 TB = 1024 GB). Big data differs from the massive data of the past, and its basic characteristics can be summarized by four V’s (Volume, Variety, Value, and Velocity): large volume, diversity, low value density, and high speed.

First, the data volume is huge, rising from the TB level to the PB level.

Second, there are many types of data, such as web logs, videos, pictures, and geographic location information.

Third, the value density is low. Take video as an example: in a continuous surveillance recording, only one or two seconds of data may be useful.

Fourth, processing is fast, following the so-called "1-second rule". This last point is also a fundamental difference from traditional data mining technology. The Internet of Things, cloud computing, the mobile Internet, the Internet of Vehicles, mobile phones, tablets, PCs, and sensors all over the globe are all sources or carriers of data.

Instructors

Taobao Technology Department | Xianyu Technology | Yuncong

"Focusing on improving Flutter fluency, this talk shares the challenges, the construction of monitoring tools, and the optimizations consolidated into components and containers, and closes with optimization advice."

Zhang Yunlong (alias Yuncong) is a client-side expert at Xianyu. He has worked at NetEase, ByteDance, and Alibaba. He is currently at Alibaba, where he is responsible for the package size, fluency, and startup experience of the Xianyu app.

Outline

This talk covers Flutter fluency in five parts: 1. Flutter fluency optimization challenges; 2. List container and Flutter DynamicX component optimization; 3. Performance measurement and DevTools extension; 4. Flutter scroll curve optimization; 5. Performance optimization suggestions.

FLUTTER fluency optimization challenge

Business complexity challenge

Flutter has always been known for its smoothness, and the list controls shown in Flutter Gallery (on the left) are indeed very smooth. But a real business scenario (on the right) is more complex than the Gallery list demo:

Within the same card, there are more view controls, and they are more complex (for example, with rounded corners);

When the list scrolls, there is more view logic, such as controlling the scrolling and disappearance of other controls;

Card controls carry more business logic, such as different labels and promotional prices based on backend data, as well as other common business logic.

Because Xianyu is an e-commerce app, we need some dynamic capability to handle frequently changing promotions. Here we use Alibaba’s Flutter DynamicX component to provide it.

Framework challenge

Let’s look at the overall flow of a list scroll, focusing only on the free-scroll phase after the finger is released.

When the finger is released, the initial velocity is calculated in ScrollDragController.end;

The UI thread sends a RequestFrame to the platform thread, and the platform thread calls BeginFrame back on the UI thread;

In the UI thread’s animate phase, the list scrolls a short distance, and the next frame callback is registered with the platform thread;

The UI thread builds widgets, generating or updating the RenderObject tree through the diff algorithm across Flutter’s three trees;

The UI thread lays out and paints the RenderObject tree to generate a Scene object, which is finally passed to the raster thread to be drawn on screen.

The whole flow above must finish within 16.6 ms to ensure that no frame is dropped. In most cases no new card needs to be built, but when a new card enters the list area the amount of computation becomes large. Especially in complex business scenarios, completing all of it within one 16.6 ms frame is no small challenge.

The figure above is a DevTools sample of one scroll. Jank occurs when a new card comes on screen, and the other phases are very smooth; because the scroll velocity decays, the interval between janks also grows. Since most of the time is smooth, the average FPS is not low. However, the jank that occurs while a new card is being built gives users a distinct sense of stutter.
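A small calculation shows why average FPS can look healthy while the user still notices stutter. The sketch below assumes a 60 Hz display and made-up build times (57 cheap frames and 3 expensive frames where a new card is built); the function names are ours.

```python
import math

VSYNC_MS = 1000.0 / 60  # about 16.67 ms per frame at 60 Hz

def displayed_intervals(build_ms):
    """Number of vsync intervals a frame stays on screen."""
    return max(1, math.ceil(build_ms / VSYNC_MS))

def average_fps(build_times_ms):
    """Frames shown per second of wall-clock time."""
    total_ms = sum(displayed_intervals(t) for t in build_times_ms) * VSYNC_MS
    return len(build_times_ms) / (total_ms / 1000.0)

# Illustrative data: most frames are cheap, a few build a new card
frames = [6.0] * 57 + [30.0] * 3
fps = average_fps(frames)  # a little above 57
worst = max(frames)        # 30.0 ms, almost two frame budgets
```

Here only three frames miss the budget, so the average stays above 57 FPS, yet each of those three frames is a visible hitch. This is why the worst frames matter as well as the average.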

Challenge of dynamic capabilities – Flutter Dynamicx

The Xianyu app’s cards use the self-developed Flutter DynamicX to provide dynamic capabilities. The basic principle: a layout DSL is edited online, compiled into a DX file, and delivered to the device. The client parses the DX file to generate a DXComponentWidget, combines it with the card data from the backend, and finally generates the widget tree. Flutter DynamicX brings dynamic update capability, unified monitoring (for example, monitoring cards in DXComponentWidget), a good development experience (the online DSL is consistent with Android layout, which is friendly to Android developers), and online editing.

But we also pay a price in performance: DX cards add template loading and data binding overhead, widgets must be created dynamically by recursively traversing the WidgetNode tree, and the view nesting becomes deeper (discussed later).

Note: Flutter DynamicX is implemented with reference to the Alibaba Group DSL rules.

Challenge of user-perceived experience

As described above, jank is more noticeable in a Flutter list.

When jank occurs in an Android RecyclerView, it is not very noticeable. When jank occurs in a Flutter list, there is not only a pause in time but also a jump in the offset, so even small janks are amplified and become obvious;

Even if the list content is simple enough that no jank occurs during scrolling, we found that a Flutter list still does not feel the same as an Android RecyclerView:

With ClampingScrollPhysics, the list feels as if it is pulled by a magnet when it is about to stop;

With BouncingScrollPhysics, the velocity decays faster once the list starts to fling;

On 90 Hz devices, early Flutter lists did not scroll smoothly. The reason is that the touch sampling rate is 120 Hz while the screen refresh rate is 90 Hz, so some frames receive 2 touch events and others receive 1, which makes the scroll offset uneven. Since Flutter 1.22, resamplingEnabled can be used to resample touch events.
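The mismatch between a 120 Hz touch panel and a 90 Hz display is easy to reproduce on paper. The sketch below counts how many touch samples fall into each display frame; it uses integer ticks to avoid floating-point rounding, and the function name is ours.

```python
def events_per_frame(touch_hz, display_hz, frames):
    """Count touch samples landing in each display frame.

    Time is measured in ticks of 1 / (touch_hz * display_hz) seconds so
    that both periods are whole numbers of ticks.
    """
    touch_period = display_hz  # ticks between touch samples
    frame_period = touch_hz    # ticks between display frames
    counts = []
    for n in range(frames):
        start, end = n * frame_period, (n + 1) * frame_period
        first = -(-start // touch_period)  # first sample index at or after start
        last = (end - 1) // touch_period   # last sample index before end
        counts.append(last - first + 1)
    return counts

pattern = events_per_frame(120, 90, 6)  # [2, 1, 1, 2, 1, 1]
```

With the same finger speed, a frame that receives two samples moves the list twice as far as a frame that receives one, which produces the uneven offsets described above. Resampling evens this out by estimating the touch position at each frame time instead of using the raw samples.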

List container and flutterdx component optimization

Having covered the challenges of Flutter fluency optimization, we now share how we optimized fluency and consolidated the results into PowerScrollView and the Flutter DynamicX component.

PowerScrollView design and performance optimization

PowerScrollView is a Flutter list component developed in-house by the Xianyu team. It wraps and extends the Sliver protocol: for data, it adds insertion, deletion, and partial refresh; for layout, it adds a waterfall flow; for events, it adds card on-screen, off-screen, and scroll events; for control, it supports scrolling to an index.

On the performance side, it includes waterfall layout optimization, partial refresh optimization, card frame-splitting optimization, and scroll curve optimization.

PowerScrollView Waterfall Flow Layout

The PowerScrollView waterfall layout supports vertical layout, horizontal layout, and mixed layout (horizontal cards mixed with ordinary cards). Today most Xianyu list pages use PowerScrollView’s waterfall layout, for example the home page and the search results page.

PowerScrollView Waterfall Flow Layout Optimization

First, as a conventional cache optimization, we cache each card’s top-left X value and the column it belongs to.

Compared with how SliverGrid brings cards into the list area, the waterfall layout requires us to define a Page: cards are created on entry and destroyed on exit in units of a Page. Before optimization, a Page was computed from the cards in one screen’s visible area, and to determine the starting Y value of a Page, the initial layout had to compute two pages, N and N + 1, so many cards took part in layout and performance was poor. After optimization, Pages are computed approximately from the average height of all cards, which greatly reduces the number of cards taking part in layout and also the number of cards destroyed with each Page.

After the column cache and paging optimizations, we used Xianyu’s self-developed benchmark tool (described later) to compare the waterfall layout with GridView. Looking at the frame counts and the worst-frame time, their performance is basically the same.
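The two ideas above, caching each card’s column and X value and estimating pages from the average card height, can be sketched as follows. This is only an illustration under our own assumptions (shortest-column placement, a fixed number of cards per page); it is not PowerScrollView’s actual code, and both function names are hypothetical.

```python
def layout_waterfall(heights, columns, column_width):
    """Place each card in the currently shortest column.

    Returns one (column, x, y) tuple per card. The column and x values
    are what a layout cache would store, since they only change when
    cards above are inserted or removed.
    """
    column_bottoms = [0.0] * columns
    placements = []
    for height in heights:
        col = column_bottoms.index(min(column_bottoms))
        placements.append((col, col * column_width, column_bottoms[col]))
        column_bottoms[col] += height
    return placements

def estimate_page(offset_y, heights, columns, cards_per_page):
    """Approximate the page index for a scroll offset from the average height."""
    average = sum(heights) / len(heights)
    page_height = average * cards_per_page / columns
    return int(offset_y // page_height)

cards = [100, 150, 80, 60]
placed = layout_waterfall(cards, columns=2, column_width=180.0)
page = estimate_page(500, cards, columns=2, cards_per_page=4)
```

The estimate trades exactness for speed: it avoids laying out every card of neighboring pages just to find where a page starts.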

PowerScrollView local refresh optimization

Xianyu’s product team wants browsing to be smoother, without users being interrupted by load-more, so the list must trigger load-more automatically during scrolling. With Flutter’s SliverList, when load-more appends card data, the list control is marked dirty, and when the SliverList rebuilds it destroys all cards and recreates them, so performance is predictably poor. PowerScrollView provides a partial refresh optimization: all on-screen cards are cached and no longer recreated. UI thread time drops from 34 ms to 6 ms (see the lower-left picture), and the Timeline on the right shows that the depth and complexity of the view build are reduced.

PowerScrollView card fragmentation optimization

The two-cards-per-row layout in the second figure is Xianyu’s early search results page, which is not a waterfall flow. Looking at the Timeline when the cards are created (including DX widget creation and performLayout overhead), the cost of creating the cards is very high. On an ordinary mid-range device the UI thread takes more than 30 ms, and getting that down to 16.6 ms with routine optimization is very difficult. Instead, the two cards can be split apart so that each is rendered in its own frame.

Looking at the source code, the basic idea is to mark the card widget. When the mark is true, the right card first builds a placeholder widget (an empty Container) through _BuildPlaceHoldercell and registers a callback for the next frame. In the next frame, the right card sets NeedShowRealcell to true, refreshes itself, and then builds the real content.

Does delaying the real content of a card affect what is displayed? Because a Flutter list has a cacheExtent area beyond the visible area, and this area is not visible, in most scenarios users never see the blank card.

Using the Flutter benchmark tool again, the 90th- and 99th-percentile frame times drop noticeably after card frame-splitting, and the number of dropped frames falls from 39 to 27.

Note that when listening for the next frame, you need to call WidgetsBinding.instance.scheduleFrame to trigger a RequestFrame. Otherwise, when the list is first displayed there may be no next-frame callback, the tasks in the delayed display queue are never executed, and the first screen is displayed incorrectly.

Delayed framing optimization ideas and suggestions

Comparison of Flutter and H5 design:

Dart and JS both use a single-threaded model, and data must be serialized and deserialized when crossing threads;

Flutter widgets are similar to the H5 VDOM, and both have a diff process.

Early on, Facebook proposed the Fiber architecture when optimizing React: following the order parent node → child node → sibling node → child node, the VDOM tree is converted into the Fiber data structure (a linked structure), which makes the reconcile phase interruptible and resumable; based on the Fiber data structure, interrupted work continues in the next frame.

Drawing on React Fiber, we proposed our own deferred frame-splitting optimization. It goes beyond splitting by left and right card: the render content is split into current-frame tasks, high-priority deferred tasks, and low-priority deferred tasks, with on-screen priority decreasing in that order. The current-frame task is the blank Container placeholders for the left and right cards; each high-priority deferred task takes a frame to itself, with image parts still using Container placeholders; in the Xianyu scenario, we split all DX image widgets out of the cards as low-priority deferred tasks, limited to no more than 10 per frame.

By splitting one frame’s display work across 4 frames, the highest UI thread time on a high-end device was reduced from 18 ms to 8 ms.

Note 1: the settings for high-priority and low-priority tasks differ between business scenarios.

Note 2: when scrolling on a low-end device (such as the Vivo Y67), the frame-splitting scheme lets users see the list go blank before the content appears.
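The priority scheme can be simulated outside Flutter to see how work spreads across frames. The sketch below is a simplified model under our own assumptions: the class and task names are hypothetical, each card has one content task and several image tasks, and the limit of 10 low-priority tasks per frame follows the text.

```python
from collections import deque

class DeferredFrameQueue:
    """Spreads card-building work over several frames."""

    def __init__(self, low_priority_per_frame=10):
        self.high = deque()  # card content, one task per frame
        self.low = deque()   # images, batched per frame
        self.low_priority_per_frame = low_priority_per_frame

    def add_card(self, card_id, image_count):
        """Current frame: build only a cheap placeholder, defer the rest."""
        self.high.append(f"content:{card_id}")
        for i in range(image_count):
            self.low.append(f"image:{card_id}:{i}")
        return f"placeholder:{card_id}"

    def run_frame(self):
        """Run one frame's share of deferred work and return what was built."""
        if self.high:
            return [self.high.popleft()]  # high priority gets a frame to itself
        batch = []
        while self.low and len(batch) < self.low_priority_per_frame:
            batch.append(self.low.popleft())
        return batch

queue = DeferredFrameQueue()
first_frame = [queue.add_card("left", 4), queue.add_card("right", 4)]
later_frames = []
while queue.high or queue.low:
    later_frames.append(queue.run_frame())
```

In this toy run the placeholders appear in the first frame, each card’s content takes one later frame, and the eight images fit into a single batch, so the work that would have landed in one frame is spread over four.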

FLUTTER-DYNAMICX Component Optimization – Principle Explanation

The "Android-like layout DSL" is edited and compiled into a binary DX file. The client downloads, loads, and parses it to generate the WidgetNode tree (see the figure on the right).

After the business data is delivered from the backend, the widget tree is generated by recursively traversing the WidgetNode tree and is finally displayed.

Note: Flutter DynamicX is implemented with reference to the Alibaba Group DSL rules.

FLUTTER-DYNAMICX Component Optimization – Cache Optimization

Once the principle is clear, it is easy to see that the steps in the red box in the figure (binary template file parsing and loading, data binding, and dynamic widget creation) all carry overhead. To avoid repeating that overhead, we cache DXWidgetNode and DXWidget; the code selected in blue shows the widget cache.

FLUTTER-DYNAMICX Component Optimization – Independence ISOLATE Optimization

In addition, the logic above is moved into a separate isolate to keep its overhead as low as possible. In an online grayscale A/B experiment, the average bad-frame (jank) ratio dropped from 2.21% to 1.79%.

FLUTTER-DYNAMICX Component Optimization – Hierarchical Optimization

Flutter DynamicX provides an Android-like layout DSL. To implement each control’s padding, margin, and corners it adds a Decoration layer, and it also adds a DXContainerRender layer. Each layer has a clear responsibility and the code structure is clear. However, the two extra layers deepen the widget tree, which complicates the diff logic of the three trees and lowers performance. We therefore merged the Decoration layer and the DXContainerRender layer; the Timeline in the middle shows that the depth and complexity of the flame graph are lower after optimization. In an online grayscale A/B experiment, the average bad-frame ratio dropped from 2.11% to 1.93%.

Performance measurement and devtool extension

Having covered the optimizations, this part describes how fluency is measured and how the tools were built and extended.

Offline scene – Flutter Benchmark

When measuring Flutter fluency, what matters is the computation time on the UI thread and the raster thread. So to compare before and after an optimization, we use the per-frame time of the UI thread and the raster thread.

In addition, measured fluency is affected by the gesture and the scroll velocity, so results based on manual operation are subject to error. Here we use WidgetController to fling the list control programmatically.

The tool lets you set the scroll velocity, the number of scrolls, the interval between scrolls, and so on. After the scroll test finishes, it reports UI and raster thread frame times at the 50th, 90th, and 99th percentiles, giving performance data along several dimensions.
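The percentile summary can be computed with the standard library. The sketch below is not the benchmark tool itself; `frame_report` is a hypothetical helper, the budget assumes a 60 Hz display, and the sample data is made up.

```python
import statistics

def frame_report(frame_times_ms, budget_ms=1000.0 / 60):
    """Summarize per-frame times at the 50th, 90th, and 99th percentiles."""
    cuts = statistics.quantiles(frame_times_ms, n=100, method="inclusive")
    return {
        "p50": cuts[49],
        "p90": cuts[89],
        "p99": cuts[98],
        "worst": max(frame_times_ms),
        "over_budget": sum(1 for t in frame_times_ms if t > budget_ms),
    }

# Illustrative data: frame times of 1 ms, 2 ms, ..., 100 ms
report = frame_report([float(t) for t in range(1, 101)])
```

Percentiles are more informative than the mean here because a scroll is mostly cheap frames with a few expensive ones: the 90th and 99th percentiles expose those expensive frames, while the median shows the typical cost.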

Offline scenario – Based on screen recording

Flutter Benchmark gives multi-dimensional measurements for Flutter pages, but sometimes we need to compare against competitor apps, so we need a tool that works across different technology stacks. Xianyu developed a screen-recording-based tool on Android. Treating the phone’s display as a sequence of pictures, it obtains the screen data (a byte array) through VirtualDisplay every 16.6 ms and uses the hash of the byte array to represent the current picture. If two consecutive reads yield the same hash, a jank is considered to have occurred.

To ensure that the fluency detection app itself does not cause jank, the captured picture is compressed when it is read, with a higher compression ratio on low-end devices.

With this non-intrusive tool, one scroll test yields the average FPS (57), the variance of the frame distribution (7.28), the number of big janks per second (0.306), and the cumulative big-jank time (27.919). The array in the middle shows the frame distribution: 371 is the number of normal frames, 6 is the number of small janks of 16.6 × 2 ms, and 1 is the number of janks of 16.6 × 3 ms.

Here a big jank is defined as a jank longer than 16.6 × 2 ms.
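The hash comparison at the core of the screen-recording approach can be sketched in a few lines. Capturing the screen is platform-specific and omitted here; the byte strings below stand in for captured pictures, the choice of SHA-256 is ours, and `count_stalls` is a hypothetical name.

```python
import hashlib

def frame_hash(pixels: bytes) -> str:
    """Represent one captured picture by the hash of its bytes."""
    return hashlib.sha256(pixels).hexdigest()

def count_stalls(captures):
    """Count samples whose picture is identical to the previous sample."""
    stalls = 0
    previous = None
    for pixels in captures:
        current = frame_hash(pixels)
        if current == previous:
            stalls += 1  # the screen did not change between two samples
        previous = current
    return stalls

# Stand-in captures taken at a fixed interval during a scroll
captures = [b"frame-a", b"frame-b", b"frame-b", b"frame-c", b"frame-c", b"frame-c"]
stalled = count_stalls(captures)  # 3
```

Because this only looks at pixels, it works the same way for Flutter, native, and web pages, which is what makes cross-app comparison possible. It is only meaningful while the content is supposed to be moving, for example during a scripted scroll.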
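The frame distribution array and the big-jank definition can be reproduced from a list of picture durations. The sketch below uses the 16.6 ms interval from the text; the helper names and the sample durations are our own.

```python
from collections import Counter

FRAME_MS = 16.6  # sampling interval used in the text

def frame_distribution(durations_ms):
    """Bucket durations by how many 16.6 ms intervals each one spans."""
    counts = Counter(max(1, round(d / FRAME_MS)) for d in durations_ms)
    return [counts[k] for k in range(1, max(counts) + 1)]

def is_big_jank(duration_ms):
    """A big jank lasts longer than two frame intervals."""
    return duration_ms > 2 * FRAME_MS

# Illustrative durations matching the distribution quoted above
durations = [16.6] * 371 + [33.2] * 6 + [49.8]
distribution = frame_distribution(durations)          # [371, 6, 1]
big_janks = sum(is_big_jank(d) for d in durations)    # 1
```

Under this definition the six janks of exactly two intervals count as small janks, and only the one spanning three intervals is a big jank.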

Offline Scene – Performance Detection Based on DEVTOOL

In addition, Xianyu has extended DevTools for its own scenarios. In the extended Timeline view, frames that take more than 16.6 ms are highlighted in red, which makes development easier.

Online scene – How Flutter high-availability FPS detection works

For the online scenario, Xianyu developed its own Flutter high-availability monitoring. The basic principle is based on 2 events:

The ui.window.onBeginFrame event

The engine signals that the VSYNC has arrived and notifies the UI thread to start preparing to build the next frame

It triggers the SchedulerBinding.handleBeginFrame callback

The ui.window.onDrawFrame event

The engine notifies the UI thread to start drawing the next frame

It triggers the SchedulerBinding.handleDrawFrame callback

Here we record a frame-start event before handleBeginFrame is processed and a frame-end event after handleDrawFrame. For each frame we also compute the list control’s offset; the specific code is omitted here. When the accumulated value exceeds 1, a calculation is performed: scenes without scrolling are filtered out, and the FPS value is calculated from the time of each frame.
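The calculation can be sketched as follows. This is our own simplified reading, not Xianyu’s implementation: each frame is recorded as a (begin, end, offset) tuple, a window counts as scrolling only if the offset moved by more than a threshold, and both the `scrolling_fps` name and the `min_scroll` threshold are assumptions.

```python
def scrolling_fps(frames, min_scroll=1.0):
    """Compute FPS for a window of frames, or None if the list did not scroll.

    frames: list of (begin_ms, end_ms, scroll_offset) tuples, one per frame.
    """
    if len(frames) < 2:
        return None
    scrolled = sum(abs(b[2] - a[2]) for a, b in zip(frames, frames[1:]))
    if scrolled <= min_scroll:
        return None  # filter out windows without scrolling
    elapsed_ms = frames[-1][1] - frames[0][0]
    return len(frames) * 1000.0 / elapsed_ms

# 50 frames of 20 ms each while the list moves 5 px per frame
moving = [(i * 20.0, i * 20.0 + 20.0, i * 5.0) for i in range(50)]
# The same timing with the list standing still
idle = [(i * 20.0, i * 20.0 + 20.0, 0.0) for i in range(50)]

fps_moving = scrolling_fps(moving)  # 50.0
fps_idle = scrolling_fps(idle)      # None
```

Filtering out idle windows matters because a static page produces few or no frames, which would otherwise drag the reported FPS down without any jank having occurred.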

Online scenario – online stack detection with FlutterBlockCanary

Once Flutter high availability has given us the online FPS value, locating the cause requires collecting stack information. Idle Fish collects jank stacks with its self-developed FlutterBlockCanary. The basic principle is to send a signal from the C layer at a fixed interval, for example every 5 ms, and to capture the Dart UI thread stack each time the signal is received. The resulting series of stacks is then aggregated: if the same stack appears several times in a row, a jank is considered to have occurred there, and that stack is the jank stack we are looking for.

The figure below shows the stack information collected by FlutterBlockCanary; framefpsRecorder.getscrolloffset in the middle is the call that caused the jank.
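The aggregation step can be illustrated with a short Python sketch. It is not FlutterBlockCanary's code: the function name, the 16.6 ms reporting threshold, and the sample stacks are assumptions chosen for the example, while the 5 ms sampling interval comes from the text.

```python
from itertools import groupby


def find_jank_stacks(samples, interval_ms=5.0, threshold_ms=16.6):
    """Group consecutive identical stacks and report runs longer than threshold_ms."""
    janks = []
    for stack, run in groupby(samples):
        run_length = sum(1 for _ in run)
        blocked_ms = run_length * interval_ms
        if blocked_ms > threshold_ms:
            janks.append((stack, blocked_ms))
    return janks


# Hypothetical stacks sampled every 5 ms, written as tuples of frame names.
idle_stack = ("handleBeginFrame",)
slow_stack = ("handleDrawFrame", "framefpsRecorder.getscrolloffset")
other_stack = ("handleDrawFrame", "buildScope")
stack_samples = [idle_stack, idle_stack] + [slow_stack] * 5 + [other_stack]

print(find_jank_stacks(stack_samples))
```

Only the run of five identical samples (about 25 ms) is reported; the two-sample run stays under the threshold.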

Online scenario – over-rendering detection with FlutterBlockCanary

In addition, FlutterBlockCanary integrates over-rendering detection. By overriding WidgetsFlutterBinding to replace the BuildOwner object, and overriding the scheduleBuildFor method, it intercepts dirty Elements. For each dirty Element node it extracts the depth of the node, the number of direct child nodes, and the number of all child nodes.

Using the number of all child nodes, we found that on the Idle Fish detail page the "Quick Question View" was being rebuilt during scrolling, and that both its direct and total child counts were too large. Inspecting the code located the problem in the view's level in the hierarchy; by sinking the view down to a leaf node, the number of nodes rebuilt was reduced from 255 to 43.
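The three numbers extracted for each dirty node can be shown on a toy tree. This Python sketch only models the arithmetic; the Node class and the tree below are hypothetical and stand in for Flutter's Element tree, which is not reproduced here.

```python
class Node:
    """A minimal stand-in for an element in a widget tree."""

    def __init__(self, name):
        self.name = name
        self.parent = None
        self.children = []

    def add(self, child):
        child.parent = self
        self.children.append(child)
        return child


def depth(node):
    """Number of ancestors between the node and the root."""
    level = 0
    while node.parent is not None:
        node = node.parent
        level += 1
    return level


def descendant_count(node):
    """Number of all child nodes, direct and indirect."""
    return sum(1 + descendant_count(child) for child in node.children)


def dirty_stats(node):
    return {
        "depth": depth(node),
        "direct_children": len(node.children),
        "all_children": descendant_count(node),
    }


# Hypothetical tree: root -> page -> view -> three items, one with two children.
root = Node("root")
page = root.add(Node("page"))
view = page.add(Node("view"))
first = view.add(Node("item-1"))
view.add(Node("item-2"))
view.add(Node("item-3"))
first.add(Node("label"))
first.add(Node("icon"))

print(dirty_stats(view))
```

A dirty node high in the tree drags every descendant into the rebuild, which is why moving the changing part toward a leaf shrinks the count.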

Flutter scroll curve optimization

The jank optimization methods and measurement standards discussed so far mainly revolve around FPS. From the perspective of how scrolling actually feels to users, however, we found that Flutter still has many points that can be optimized.

Flutter list scroll curve versus native curve

Comparing the offset/time scroll curves shows that Flutter's BouncingScrollSimulation is close to the iOS scroll curve, and ClampingScrollSimulation is close to Android's RecyclerView. The Flutter source code confirms this.

Because BouncingScrollSimulation supports overscroll bounce, many pull-to-refresh and load-more features are built on top of BouncingScrollSimulation, which makes scrolling on Flutter pages feel inconsistent with native Android pages.

Flutter list performance and optimization under fast scrolling

Although ClampingScrollSimulation scrolls much like Android's RecyclerView, in fast-fling scenarios the Flutter list stops abruptly at the end of a quick scroll. The scroll curve shows why: just before the list stops, the velocity does not keep declining but instead speeds up, reaches the end point, and stops. Plotting the formula from the source code shows that Flutter's ClampingScrollSimulation uses a fitted formula to approximate the Android RecyclerView curve. In a fast fling, the end point of the fitted curve is not at the value corresponding to 1 but at the position marked in the right-hand figure, where the velocity increases.

In other words, Flutter's formula fit is not ideal. Recently, a PR has also been proposed that implements the RecyclerView curve in Dart.

Flutter list performance and optimization when jank occurs

As mentioned in the first chapter, at the same FPS, say 55, a native list feels smooth while the stutter in a Flutter list is more noticeable. One reason is that native lists usually do work on multiple threads, so big janks are less likely. The other is that for the same small jank, Flutter stutters visibly while the native list shows nothing the user can feel. Why?

When building list items, we deliberately introduced small janks and compared the Flutter list with RecyclerView before and after. The RecyclerView offset does not jump, whereas the Flutter curve shows many spikes. This is because Flutter scrolling is computed from a distance/time (D/T) curve: when a jank occurs, Δt doubles, and the offset jumps with it. It is this combination of a pause in time and a jump in offset that makes users perceive the Flutter list as not smooth under small janks.

By modifying the y = D(t) formula so that Δt is reset to 16.6 ms when a jank occurs, the offset no longer jumps under small janks. For big janks there is no need to reset Δt to 16.6 ms: the pause itself already makes the jank obvious to the user, so suppressing the offset jump would only shorten the distance the list scrolls.
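A small numerical sketch shows the effect of resetting Δt. It is written in Python and is not the actual change to Flutter's scroll simulation: the function names are hypothetical, and a linear D(t) of 0.5 px per ms is assumed in place of the real scroll curve so that the per-frame steps are easy to read.

```python
FRAME_MS = 16.6


def effective_dt(dt_ms):
    """Reset a small jank's delta-t to one frame; leave normal frames and big janks as-is."""
    if FRAME_MS < dt_ms <= FRAME_MS * 2:
        return FRAME_MS
    return dt_ms


def offsets(frame_dts_ms, distance_fn, reset_small_jank):
    """Advance the simulation clock frame by frame and sample y = D(t)."""
    t = 0.0
    result = []
    for dt in frame_dts_ms:
        t += effective_dt(dt) if reset_small_jank else dt
        result.append(distance_fn(t))
    return result


def steps(values):
    """Per-frame movement, i.e. the difference between consecutive offsets."""
    return [b - a for a, b in zip(values, values[1:])]


def linear_distance(t_ms):
    # Assumed stand-in for the real scroll curve: 0.5 px per millisecond.
    return 0.5 * t_ms


frame_dts = [16.6, 16.6, 33.2, 16.6]  # the third frame is a small jank
raw_steps = steps(offsets(frame_dts, linear_distance, reset_small_jank=False))
smooth_steps = steps(offsets(frame_dts, linear_distance, reset_small_jank=True))
print(raw_steps)
print(smooth_steps)
```

Without the reset, the janky frame moves the list twice as far as its neighbors; with the reset, every frame moves the same distance, at the cost of the list falling slightly behind the ideal curve.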

Performance optimization

Finally, here are some suggestions for performance optimization.

When optimizing, we should pay more attention to how the app feels to the user, not just to performance numbers. As the upper-right figure shows, even with the same FPS value, the experience is clearly different once jank occurs. Of the two game recordings at the bottom, the left one averages 40 FPS and the right one 30 FPS, yet the right one feels smoother.

Pay attention not only to the performance of the UI thread but also to the overhead of the raster thread; features and operations such as saveLayer can also cause jank.

As for tools, use different tools in different scenarios. Take care to distinguish whether a problem detected by a tool is stably reproducible or just occasional data jitter. Also consider the performance overhead of the tool itself, which needs to be as low as possible.

In terms of optimization ideas, we should broaden our directions. Most Flutter optimizations focus on reducing computation; multithreading is a less explored direction, for which the independent-isolate optimization in Flutter DynamicX is a reference. In addition, for tasks that are hard to finish within one frame, consider whether they can be split across multiple frames so that no single frame janks and responding to the user takes priority.

Finally, I recommend following the Flutter community, which continually lands optimizations of all kinds. Regularly upgrading Flutter, or maintaining your own branch and cherry-picking optimization commits, are both good choices.

Performance analysis tool usage suggestions

Among Flutter tools, the first recommendation is the official DevTools: its Timeline and CPU flame chart are very helpful for discovering problems. Flutter also provides a rich set of debug flags to help locate problems, and being familiar with what each flag does will be a real help in daily development. Beyond the official tools, performance logs are good auxiliary information: as shown in the lower-right corner, Idle Fish's Fish-Redux component outputs the cost of tasks during scrolling, which makes it easy to see which task was expensive at a given moment.

Overhead of the performance analysis tools themselves

Performance detection tools inevitably have some overhead, but it must be kept within an acceptable range, especially online. In the case shared earlier, FlutterBlockCanary found that framefpsRecorder.getscrolloffset was time-consuming, and that logic is exactly how Flutter high availability obtains the scroll offset. As the source code in the right-hand figure shows, every frame had to recursively traverse the tree to collect RenderViewportBase objects, which is not a small overhead. In the end, we added a cache to avoid the repeated computation during scrolling.
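The cache idea can be sketched as follows. This is a Python illustration of memoizing a recursive tree search, not the actual fix: the class names are hypothetical, the tree is a toy, and the point at which the cache is invalidated (for instance when a scroll ends or the tree changes) is an assumption, since the text does not specify it.

```python
class TreeNode:
    """A minimal stand-in for a render object; is_viewport marks the nodes we look for."""

    def __init__(self, name, is_viewport=False):
        self.name = name
        self.is_viewport = is_viewport
        self.children = []


class ViewportFinder:
    """Finds viewport nodes once and reuses the result until invalidated."""

    def __init__(self, root):
        self.root = root
        self.traversals = 0  # counts full tree walks, for demonstration only
        self._cache = None

    def _collect(self, node, found):
        if node.is_viewport:
            found.append(node)
        for child in node.children:
            self._collect(child, found)

    def viewports(self):
        if self._cache is None:
            self.traversals += 1
            found = []
            self._collect(self.root, found)
            self._cache = found
        return self._cache

    def invalidate(self):
        # Assumed policy: call this when scrolling ends or the tree changes.
        self._cache = None


# Hypothetical tree with a single viewport.
tree_root = TreeNode("root")
page_node = TreeNode("page")
list_node = TreeNode("list", is_viewport=True)
tree_root.children.append(page_node)
page_node.children.append(list_node)

finder = ViewportFinder(tree_root)
for _ in range(100):  # one lookup per frame during a scroll
    finder.viewports()
print(finder.traversals)
```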

Jank optimization suggestions

Drawing on the official documentation and excellent performance articles, we have accumulated many routine optimization techniques on both the UI and GPU sides. Examples include rebuilding the smallest possible widget, using itemExtent, and using Selector and Consumer, all of which avoid unnecessary diff and layout computation; and reducing saveLayer calls and replacing semi-transparent effects with images, which lightens the load on the raster thread.

For reasons of space, only some are listed here; see the official documentation for more general optimization suggestions.

Use the latest Flutter Engine

As mentioned earlier, the Flutter community is active, and optimization PRs are regularly merged into both the Framework and Engine layers. Most of them are transparent to the business layer and optimize performance from a lower-level perspective.

Here is a typical optimization. In Flutter's existing scheme, each VSYNC signal triggers a build, and when the build ends, a callback for the next VSYNC is registered. When no jank occurs, this behaves as in the figure labeled Normal. When a jank occurs, as in the figure labeled Actual Results, the build takes just over 16.6 ms; because the next build is only triggered by the callback registered for the next VSYNC, a large amount of time in between is left idle. What we would obviously prefer is for the next build to start immediately when the current one ends; assuming it finishes in time, the screen still stays smooth.

If your team allows it, upgrade the Flutter version regularly; maintaining your own independent Flutter branch is also a good choice. Cherry-picking optimizations from the community lets you keep the business stable while still benefiting from community contributions. In short, I recommend following the community.
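The difference between the two scheduling policies can be seen in a toy timeline model. This Python sketch is only an illustration of the idea, not engine code: the function names and build costs are hypothetical, and times are kept in integer microseconds with VSYNC assumed to fire every 16,600 µs.

```python
VSYNC_US = 16_600  # assumed 16.6 ms refresh interval, in microseconds


def next_vsync(time_us):
    """First VSYNC at or after time_us."""
    return -(-time_us // VSYNC_US) * VSYNC_US


def run_builds(build_costs_us, start_immediately_after_overrun):
    """Return the (start, end) time of each build under the chosen policy."""
    timeline = []
    start = 0
    for cost in build_costs_us:
        end = start + cost
        timeline.append((start, end))
        overran = cost > VSYNC_US
        if start_immediately_after_overrun and overran:
            start = end  # expected behavior: do not wait for the next VSYNC
        else:
            start = next_vsync(end)  # existing behavior: wait for the VSYNC callback
    return timeline


# Hypothetical build costs: the second build runs slightly over one interval.
costs = [10_000, 17_000, 10_000]
existing = run_builds(costs, start_immediately_after_overrun=False)
expected = run_builds(costs, start_immediately_after_overrun=True)
print(existing)
print(expected)
```

In this model the third build starts at 49,800 µs under the existing policy but at 33,600 µs under the expected one, so it finishes before the VSYNC at 49,800 µs instead of after it.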

Summary

In summary, this article has shared the challenges, monitoring tools, optimization methods, and recommendations around Flutter fluency optimization. Performance optimization should be people-centered, deriving monitoring metrics and optimization targets from how the app actually feels to users. Fluency optimization is never finished, and what is shared above is not exhaustive; many other techniques deserve attention, such as how to reuse Elements better and how to avoid a busy platform thread causing missed VSYNC signals. Only sustained technical enthusiasm and a sense of craftsmanship can push app performance to its limit. Technical teams should also stay connected with open source communities and with other teams and companies; as the saying goes, stones from other hills may serve to polish jade.