1905 Film Network special feature: As we welcome 2023, we are full of hope for a better start! As in previous years, the editorial department of 1905 Film Network has prepared a special annual list of domestic films, looking ahead to Chinese cinema in the new year.

As with our previous list ("200! Please Collect This List of the Strongest Chinese Films of 2022"), we have compiled 200 new domestic films worth looking forward to in 2023. The list includes some titles carried over from last year’s list because they were not released in 2022, and it draws on sources such as project filings and production announcements.

Before you unwrap this generous gift of 200 new films, we have selected 50 key titles to introduce, in no particular order. Which ones do you want to see? Read on, and remember to save the complete film list at the end of the article and leave a comment for a chance to win a movie gift package.

In 2023, 1905 Film Network will be with you as always to watch movies, review movies and love movies!

The Wandering Earth II

Director:

Starring:// / Sha Yi / Ning Li / Wang Zhi / Zhu Yanmanzi

Release date: January 22 (first day of the Lunar New Year)

Having dared to lock in its release date a full year in advance, the film has already become the "confidence booster" of the 2023 Spring Festival season. Whether it is the string of star-studded casting announcements or director Frant Gwo’s own admission that the production has once again "overspent and burned through" its funds, there is every reason to have boundless expectations for the sequel to the "Little Broken Ball".

From the "milestone" that once defied the odds to the "new starting point" that is now widely anticipated, what kind of Chinese science fiction will The Wandering Earth II show us? After igniting Jupiter, will there be an even more explosive showdown with the sun? Or a doomsday space war that is even more romantic, in a distinctly Chinese way, than fleeing with the Earth in tow? The secret we have waited four years for will be revealed in cinemas on the first day of the Lunar New Year.

Man Jiang Hong

Director:

Starring:///Wang Jiayi / Yun-peng Yue

Release date: January 22 (first day of the Lunar New Year)

Zhang Yimou returns to the Spring Festival season for the second time.

The cast is strong: Shen Teng and Jackson Yee form a dual male lead; Zhang Yi, Lei Jiayin and other alumni of his earlier films such as Sniper return to Zhang Yimou’s cinematic world; Yun-peng Yue, Zhang Chi and others join his cast for the first time; and the new "Mou girl", Wang Jiayi, has piqued curiosity.

The film is set in the Shaoxing era of the Southern Song Dynasty, four years after Yue Fei’s death, when Qin Gui leads his troops to hold talks with the State of Jin. On the eve of the talks, the Jin envoy dies in the prime minister’s residence, and the secret letter he was carrying goes missing. A soldier and the deputy commander of the personal guard battalion are drawn by chance into a huge conspiracy.

According to reports, this is a suspense film with multiple twists. The story unfolds inside an ancient walled compound and includes comedic elements. What breakthroughs and surprises will Zhang Yimou bring?

Boonie Bears: Guardian Code

Director:/Shao Heqi

Release date: January 22 (first day of the Lunar New Year)

The franchise won the Best Art Film Award at this year’s Golden Rooster Awards. A domestic animation brand that has been a fixture of the Spring Festival season for many consecutive years, Boonie Bears returns in 2023 with its latest installment, Boonie Bears: Guardian Code, opening a new chapter for this classic IP.

Xiong Da and Xiong Er, the bear brothers who have long been the films’ resident cuties, will finally unravel the mystery of their origins that they have kept in their hearts for years: where did their mother go after leaving them in a forest fire? Can they reunite with Mama Bear with the help of their good friend Logger Vick?

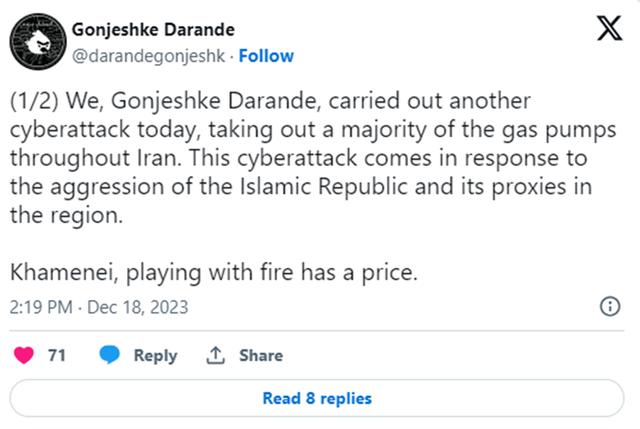

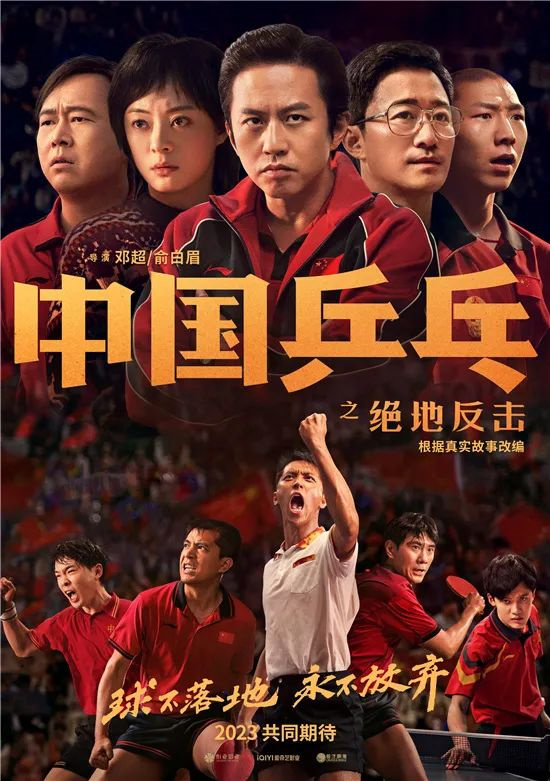

China Ping-Pong: Fighting Back from the Brink

Director:/

Starring Deng Chao///

This is another major domestic sports film.

China currently leads the world in men’s table tennis, but the film focuses on the true story of the Chinese men’s team in the early 1990s. It tells how Cai Zhenhua, then head coach of the men’s team, led Ma Wenge, Wang Tao, Ding Song, Liu Guoliang and Kong Linghui to stage a comeback from the brink at the 1995 World Table Tennis Championships in Tianjin.

The men’s team’s journey from setbacks back to the summit is sure to give the film a stirring, inspirational comeback arc. Deng Chao once again directs and stars, playing head coach Cai Zhenhua, while Sun Li plays Cai’s wife, Ying Huang, adding warmth to the story beyond the heated arena. Jason Wu also joins the cast.

Lost in the Stars

Director:/

Starring:///

The producer has great ambitions and has been launching domestic suspense blockbusters one after another. As the closing film of the 4th Hainan Island International Film Festival, this one has already met its first audiences and earned praise as a "brain-burning" thriller. All that remains is an official release date.

The film is adapted from the Soviet film A Trap for a Bachelor. He Fei’s wife suddenly disappears during their wedding anniversary trip. Just when his search has turned up nothing, his wife suddenly reappears, but He Fei insists that the woman in front of him is not his wife. As top lawyer Chen Mai steps in, more mysteries gradually emerge.

Fresh from winning the Golden Rooster Award for Best Actor, Zhu Yilong returns to the big screen alongside Ni Ni, and we look forward to the sparks their roles and performances will strike.

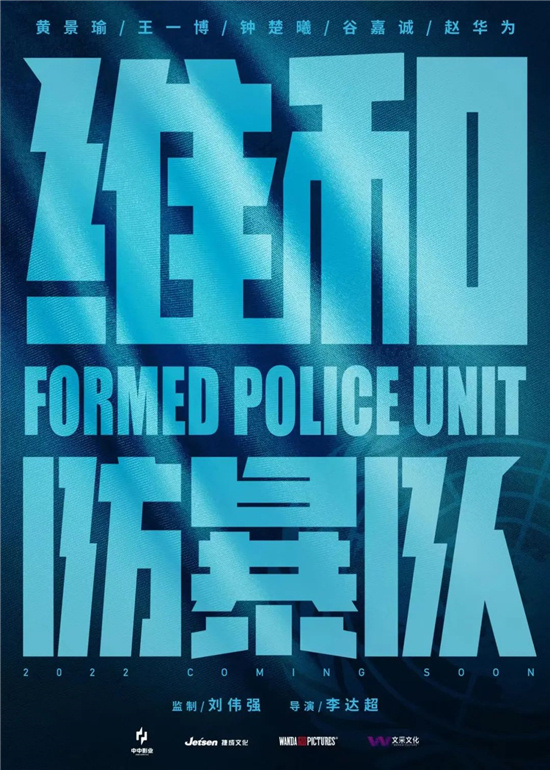

Peacekeeping Riot Squad

Director: Tat Chiu Lee

Starring://

This new mainstream action film, which has drawn attention ever since its official announcement, once again focuses on the Chinese peacekeeping police who carry out missions overseas and help maintain world peace. With strong predecessors setting a high bar, its "invincible youth" lineup is undoubtedly its biggest draw.

Johnny has played servicemen many times in film and television, and his goal here should be to surpass his own earlier turn as Gu Shun. It was once hard to picture YiBo as a peacekeeping police officer, but after "Ice Rain and Fire" he seems to have won the trust of more viewers.

Warmth

Director:

Starring:/YiBo

Director Dapeng is no street dancer himself, but the "double Bo" pairing he has found is the duo best suited to a street dance film. Having worked together before on a street dance competition show, Bo Huang and YiBo display complete rapport in the materials released so far.

Bo Huang’s acting needs no introduction, and ordinary people facing adversity are practically his specialty. The most likely surprise from YiBo in Warmth is his love of street dance, which fits closely with the character arc Dapeng wants to portray.

For a story with a sports backdrop, the fire and passion of chasing a dream matter most. Warmth tells of an "old hand" and a "newcomer" who meet each other halfway and turn adversity around together, and we expect it to bring passion and emotion to audiences.

In addition, Dapeng and YiBo each have other new films awaiting release, including a comedy directed by Dapeng and a film co-starring YiBo. Audiences have waited a long time for them, and we hope they arrive as scheduled in 2023.

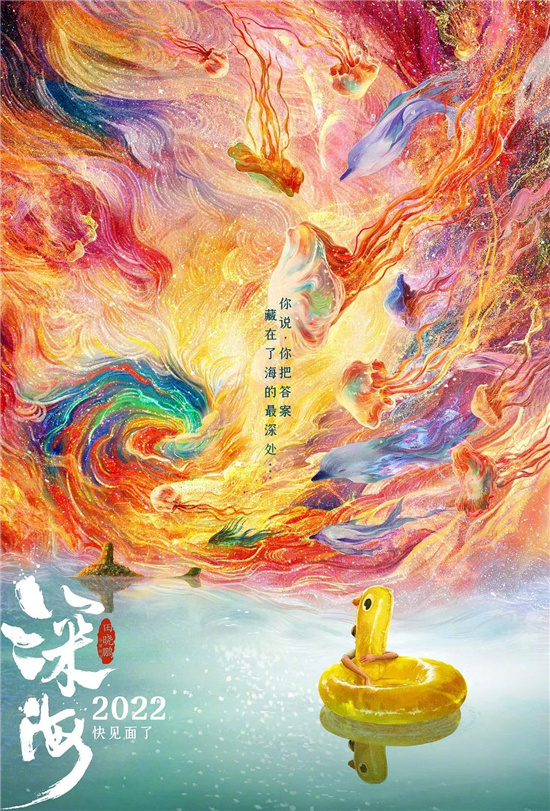

Deep Sea

Director:

Judging from the materials released so far, the film’s visual effects are arguably its biggest highlight. The production team developed a "particle ink painting" technique that renders Chinese ink painting in three dimensions and blends hundreds of colors, opening up a new visual style for Chinese animated films.

Unlike director Tian Xiaopeng’s previous work, Deep Sea does not adapt a mythological subject but focuses on fantastical sea creatures and the stories of ordinary modern people. Can this choice prove to be another winning formula?

Dragon and Horse Spirit

Director:

Starring://

Jackie Chan’s signature kung fu comedy is back! Made to mark the 60th anniversary of his debut, the film carries special significance. It also brings Jackie Chan and Jason Wu, two generations of kung fu filmmakers, together on screen for the first time as master and apprentice, which has become a major draw.

Jackie Chan said at the press conference that because the film portrays the community of "dragon and tiger" stunt performers, he was moved to tears when he read the script. He went into seclusion to prepare before shooting began and gave his all to the action scenes. With the kung fu superstar back in action and fellow stars lending support, will it have that familiar flavor?

Exchange of Life

Director:

Starring Lei Jiayin//

Director Su Lun’s previous film was also a collaboration with Lei Jiayin. It grossed over 900 million yuan and became the dark horse of its year. After swapping time and space, can she succeed again with a story that swaps personalities and bodies?

As her first starring comedy since her earlier work, the film is obviously significant for Zhang Xiaofei. She and Lei Jiayin, a natural comic presence, play a bickering pair who meet on a blind date, which gives the story a promising start. In the released trailer, she also shows a personality completely different from her previous roles.

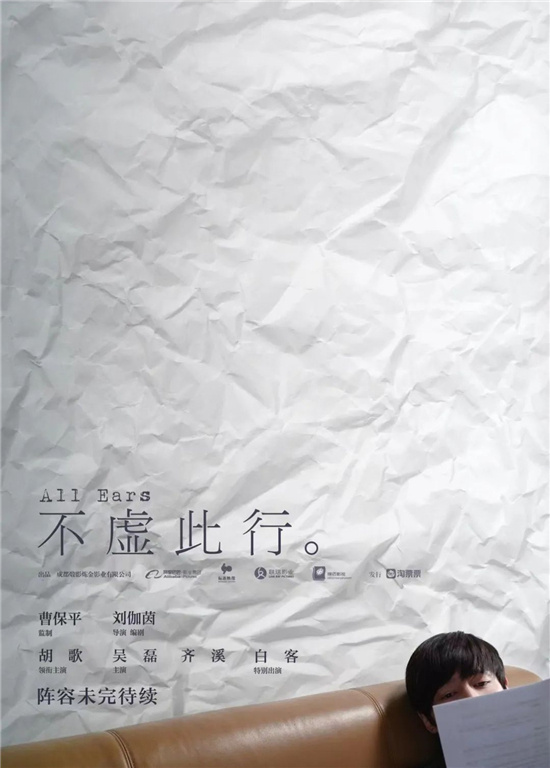

Glad You Came

Director:

Starring:///

The film chooses a very specific, little-known group as its way into the story. Wen Shan, played by Hugh, makes a living writing eulogies, fixing the shining moments of the deceased forever in the memories of the living. As the saying goes, "being forgotten is the real death", and this character and his story may offer us a different perspective on life.

Beyond the story itself, the pairing of Hugh and Leo is the second reason to look forward to Glad You Came. After all, who has not hoped to see Mei Changsu and Fei Liu together again after watching Nirvana in Fire? In the film, Xiao Yin, played by Leo, is reportedly a mirror image of Wen Shan. We wonder what kinds of shared scenes the director will give the two characters.

Where the Wind Blows

Director:

Starring: Tony Leung Chiu Wai/

Tony Leung Chiu Wai and Aaron Kwok face off on screen together for the first time. Who could pass up such a lineup? This epic recreates the story of two rival police detectives who dominated Hong Kong for 30 years. It was previously submitted for Best International Feature Film at the 95th Academy Awards on behalf of Hong Kong, China, which speaks to its quality.

Biography of Qiao Feng in Tianlong Babu

Director:

Starring Donnie Yen//

Donnie Yen’s Qiao Feng is surely the hardest-hitting Qiao Feng of all. Wearing several hats as star, producer and chief director, Donnie Yen has complete command of this film, all to create the "strongest warrior" he has in mind.

"I’ll fight for as long as he wants, and I’ll keep on fighting." Judging from the first trailer, there are plenty of impressive fight scenes from Donnie Yen to satisfy action fans, though recreating a Jin Yong classic is also quite a challenge. The film opened first in Malaysia on January 16 and in Hong Kong, China on January 19. A release date for mainland audiences has yet to be officially announced.

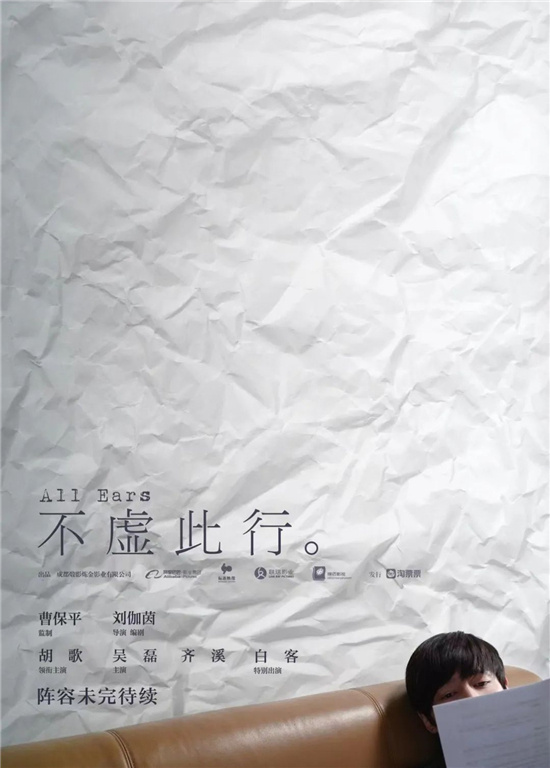

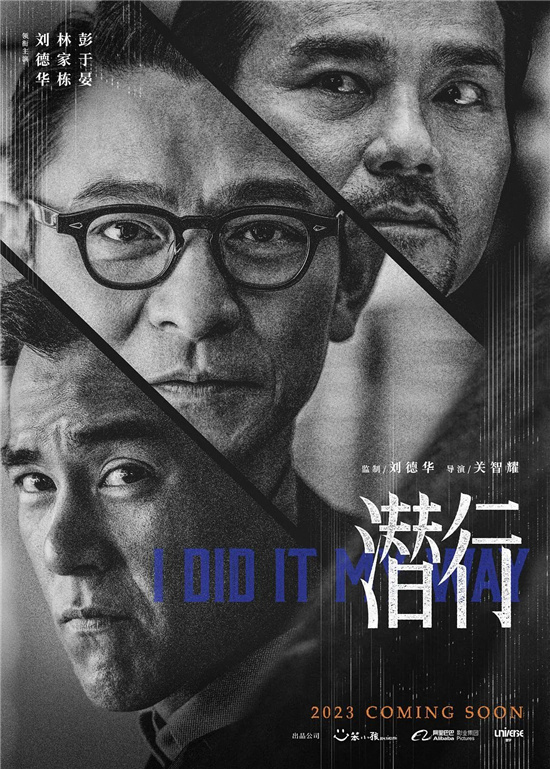

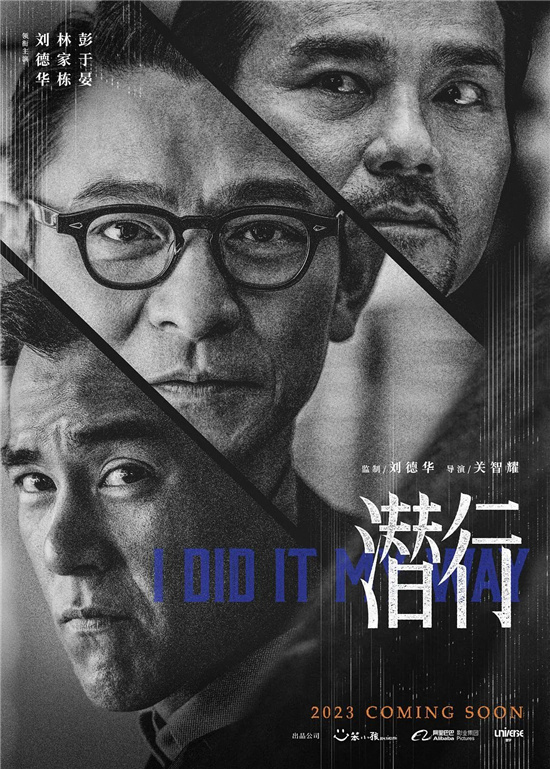

Stealth

Director:

Starring Andy Lau//

Andy Lau, Lin Jiadong and Eddie Peng Yuyan, plus Liu Yase, the newly crowned Best Actress at the Hong Kong Film Awards, and a number of other familiar faces, combined with a story of undercover police and drug enforcement: the whole package is a classic template for Hong Kong crime action films.

Jason Kwan Chi-Yiu has won two Hong Kong Film Awards for Best Cinematography. He also directed and shot the Chasing the Dragon series on Andy Lau’s recommendation. Andy Lau serves as producer this time. We hope this team brings a fresh look to Hong Kong gangster films.

Customs Front

Director:

Starring://

Jacky Cheung returns after a six-year absence from film, and Nicholas Tse serves as action director for the first time. These two leads alone make the film intriguing.

The film focuses on customs officers and the story behind major transnational smuggling cases. Director Qiu Litao and screenwriter Li Min have previously created high-profile box office hits together, and this is another action film for Nicholas Tse. We hope they can jointly bring new glory to Hong Kong action cinema.

Operation Moscow

Director: Qiu Litao

Starring:/Andy Lau/

Qiu Litao, who has always excelled at large-scale set pieces, lands in "Moscow" this time with Andy Lau, Zhang Hanyu and others. The film is adapted from real events: after the "Sino-Russian train robbery", Chinese police traveled thousands of miles and went deep into Moscow to track down and arrest the bandits.

To present the action more realistically, a large number of practical exterior sets were built. Qiu Litao’s approach to this genre should capture the realism the story requires, and the combination of Zhang Hanyu, Andy Lau and Huang Xuan adds a further guarantee of quality and texture.

Inside Story

Director:

Starring: Aaron Kwok/Yam Tat-wah/Wu Zhenyu

The new film from series director Siu Fai Mak brings together three powerhouse actors: Aaron Kwok, Yam Tat-wah and Wu Zhenyu. It is also the first collaboration between Aaron Kwok and Wu Zhenyu in 22 years.

The film follows barrister Ma Yingfeng as he works with detective Ke Dingbang to investigate a bizarre murder case while exposing the layers of corruption hidden behind a "charity". Aaron Kwok plays the lawyer, who is also a taekwondo master; Wu Zhenyu plays the detective; and Yam Tat-wah plays a chief financial officer. All three reportedly have many action scenes in the film.

Burst Point

Director: Tang Weihan

Starring://

Lin Chaoxian returns with another cops-and-robbers action film. This time he serves not as director but as producer and screenwriter, handing the reins to a new director to show what he can do.

The film tells the story of an anti-drug chief inspector who spares no effort to hunt down a fanatical drug manufacturer and trafficker and bring his gang to justice. It was shot in Hong Kong, China, Malaysia and other locations. With Lin Chaoxian steering the project, it will undoubtedly be a hardcore commercial film with a fierce action style.

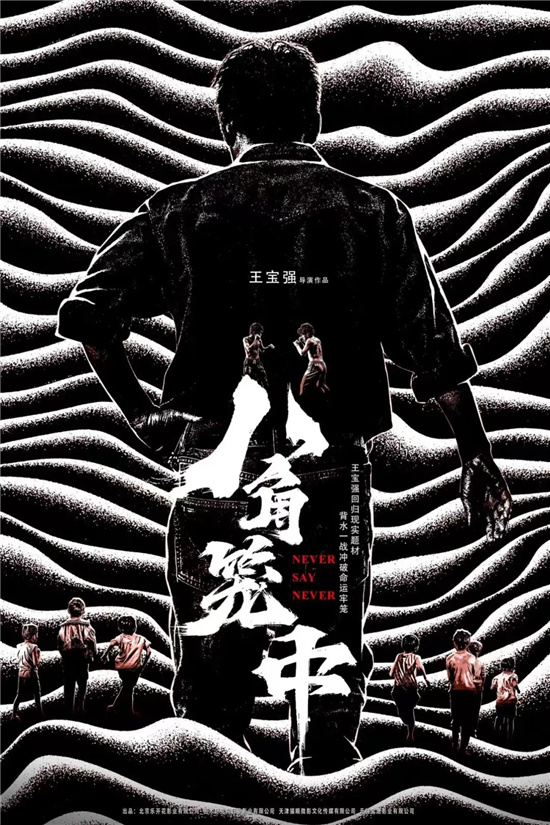

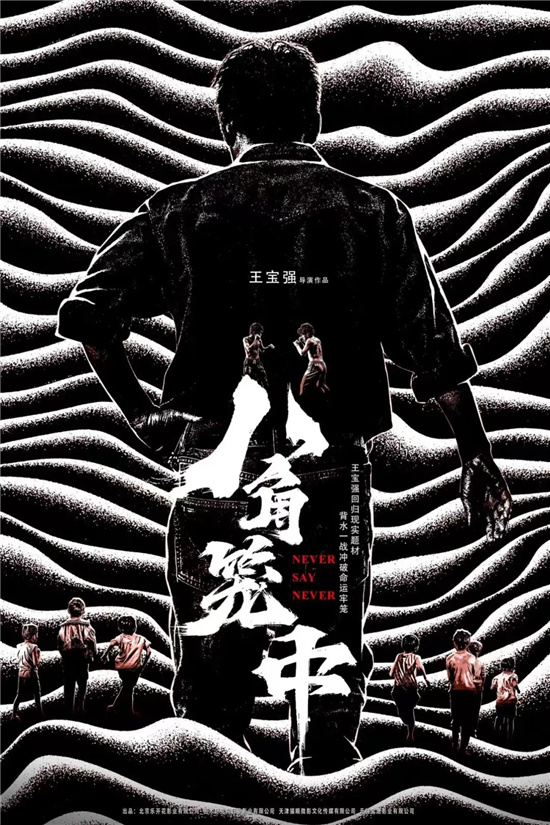

Never Say Never

Director:

Starring: Wang Baoqiang/Chen Yongsheng/

Reviews of his directorial debut were polarized, which led Wang Baoqiang to say that he "owes the audience a good movie". The trailer seems to confirm his sincerity.

A fighting coach and a group of children who love fighting: the film sets out to show their inspirational journey of changing their fate with their fists and finding a way out through sweat. Setting aside slapstick and focusing on realism, this new film, which Wang Baoqiang spent six years polishing, will hopefully let audiences see Wang Baoqiang the true filmmaker.

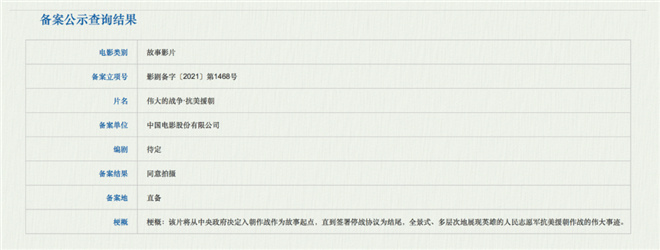

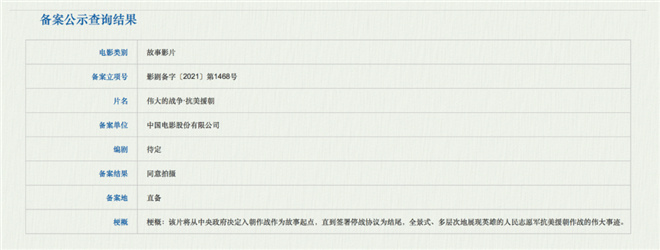

The Great Victory

Director:

What new understanding will Chen Kaige bring to the epic sweep of the war film and to the subject of the War to Resist US Aggression and Aid Korea? For Chen Kaige, this tragic and great history has been deeply moving, and the most important task of The Great Victory is to convey those feelings and reflections effectively to audiences once more. The film’s principal cast and crew have not yet been officially announced, but the lineup should be worth looking forward to.

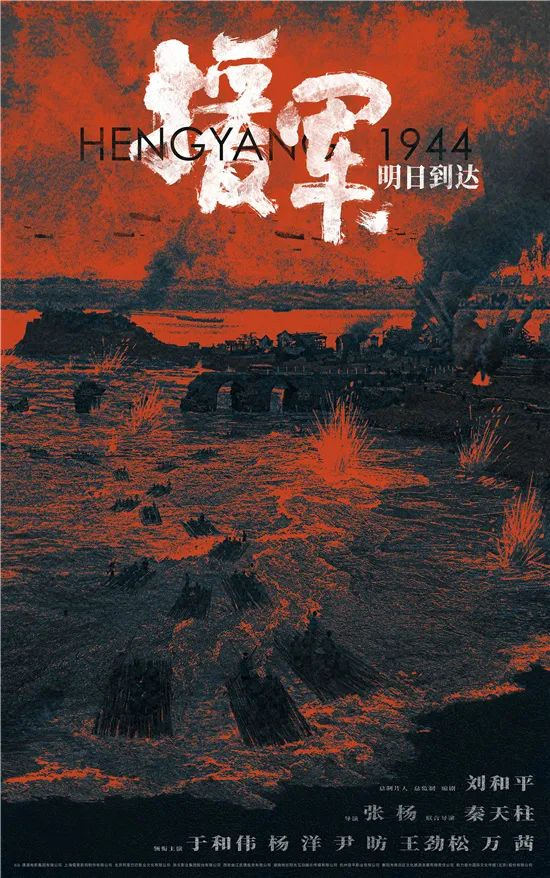

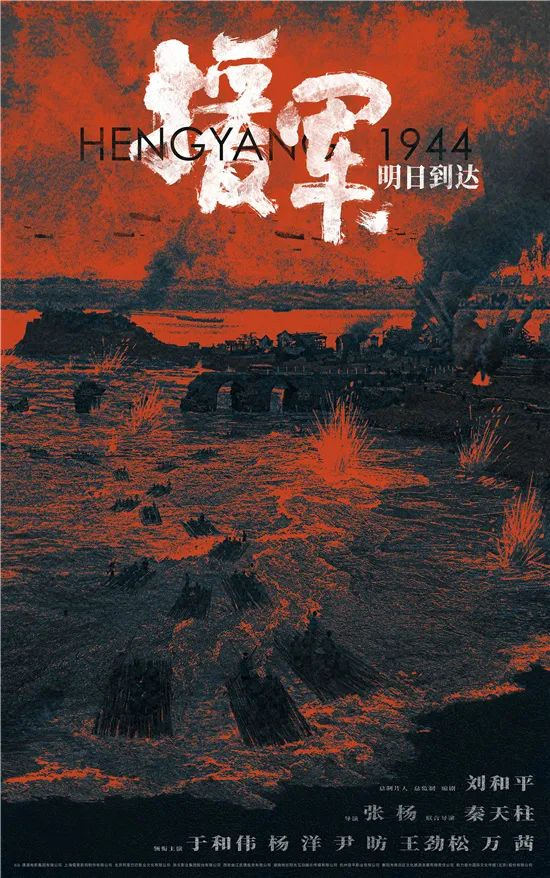

Reinforcements Arrive Tomorrow

Director: JASON ZHANG/Qin Tianzhu

Starring://Wang Jinsong//

Fans of his work are in luck! The award-winning screenwriter behind acclaimed TV series such as Yongzheng Dynasty, Daming Dynasty 1566 and No War in Beiping is entering the film industry for the first time, serving not only as screenwriter but also as chief producer and producer.

The film is adapted from real events and begins with the Defense of Hengyang in 1944: the Hengyang garrison, with fewer than 18,000 troops, held off a Japanese force totaling 110,000 outside the city for 47 days, a classic example in the history of World War II. The film is already in production.

Mr. Red Carpet

Director:

Starring: Andy Lau/Rema Sidan

In 2006, Ning Hao completed a film with Andy Lau’s support, and it became an instant hit. Sixteen years later, Ning Hao and Andy Lau are finally working together on screen.

The synopsis alone is very funny. Andy Lau plays a has-been Hong Kong star who, hoping to work with a major director again, heads deep into the countryside to experience rural life, setting off a series of farcical events. The protagonist both mirrors and contrasts with Andy Lau himself, and Ning Hao’s wild, absurdist humor makes the film one to look forward to.

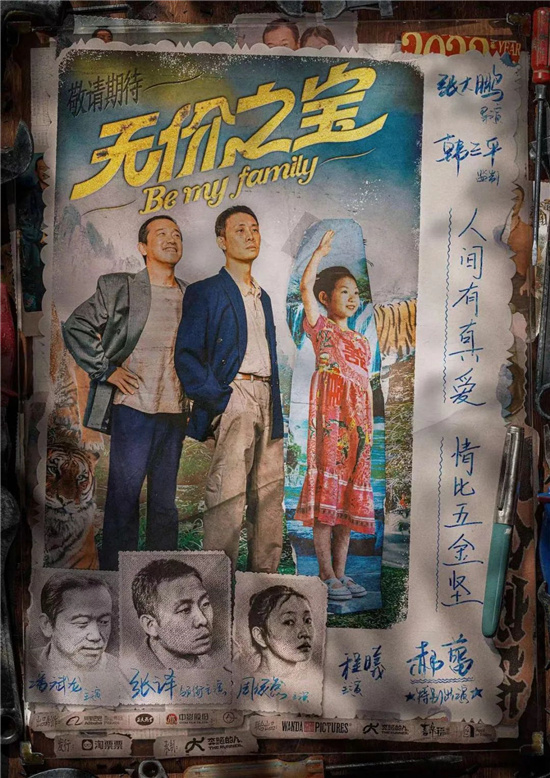

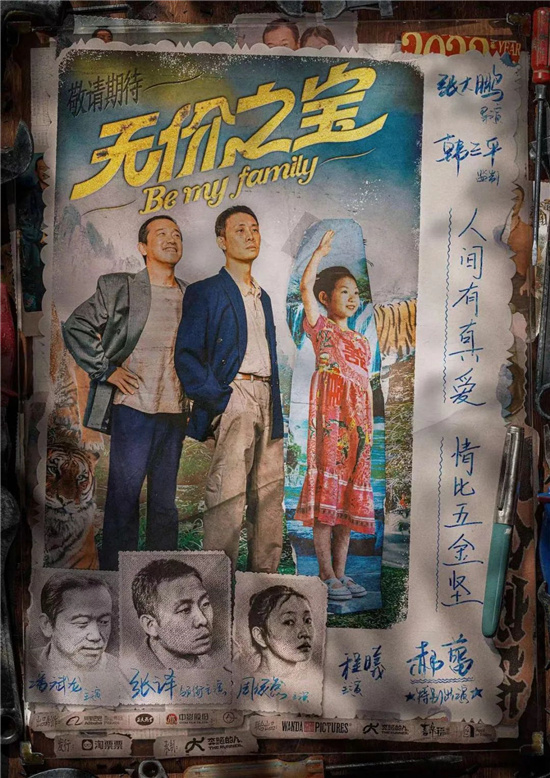

Priceless Treasure

Director:

Starring: Zhang Yi/Pan Binlong/

With the advertising short film What Is Peppa, he became a viral phenomenon online. This time, for his first theatrical feature, director Zhang Dapeng has assembled a superb lineup of Zhang Yi, Pan Binlong and Hao Lei. Beyond good luck, he clearly has real skill.

Renamed from Hardware Family, the film tells of a "trouble" that falls from the sky: a debtor’s daughter and a pair of buddies who stick together through thick and thin form a makeshift family that is extremely "unreliable" yet very "warm". Returning to his "comfort zone" of playing ordinary working-class characters, what kind of performance will Zhang Yi deliver this time?

The Three Battalions

Director:

Starring: Zhang Yi

The Three Battalions is another annual crime suspense film produced by Chen Sicheng, following the Sheep Without A Shepherd series and Lost in the Stars. Dai Mo directs, and a Golden Rooster Award winner for Best Screenwriter handles the script, making for a strong behind-the-scenes lineup.

Lead actor Zhang Yi’s screen performances have grown ever stronger in recent years. Working with Chen Sicheng this time feels like a throwback to "Soldiers Assault". The other lead actors have reportedly each worked with Zhang Yi before, and this time they join forces again.

The film is adapted from the nonfiction work "Please tell the director that the task of the Three Battalions has been completed", which many readers felt was well suited to screen adaptation. The Three Battalions is in high demand in the industry: besides the film version, there is also an online drama version.

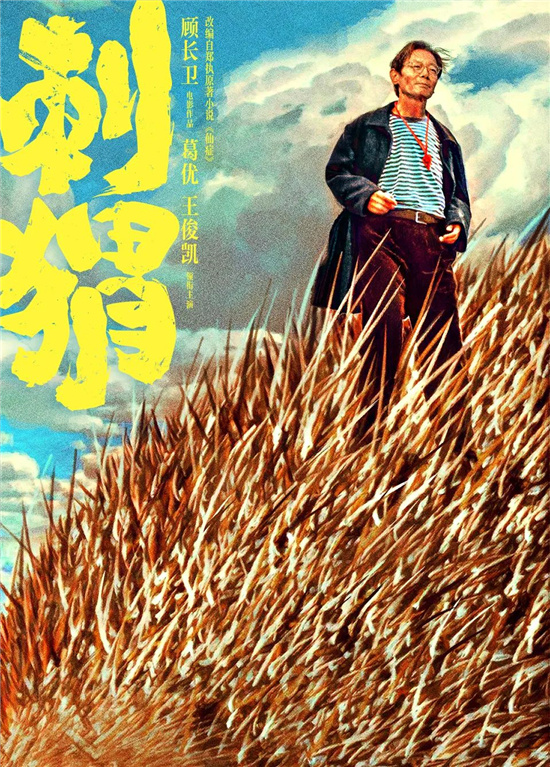

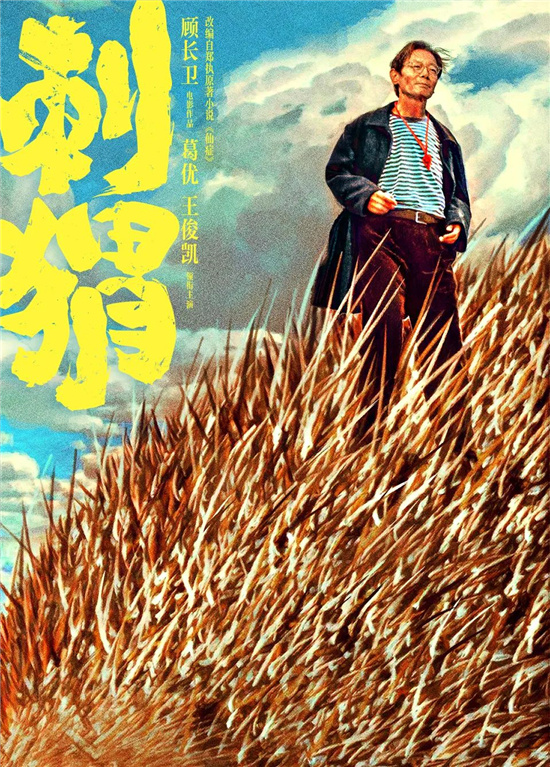

Hedgehog

Director:

Starring:/

Gu Changwei and Ge You collaborate again after 30 years on this adaptation of Zheng Zhi’s novel Fairy Disease, with Zheng Zhi also serving as screenwriter.

In the film, Ge You plays Wang Zhantuan, a psychiatric patient, and Karry plays Zhou Zheng, an insecure teenager with a stutter. The film tells how Wang Zhantuan and his nephew Zhou Zheng, two "misfits" in the eyes of conventional society, support each other through hardship, in a story marked by humanistic compassion and a touch of the absurd. With Ge You and Karry pairing veteran and newcomer, we look forward to another realist masterpiece from Gu Changwei.

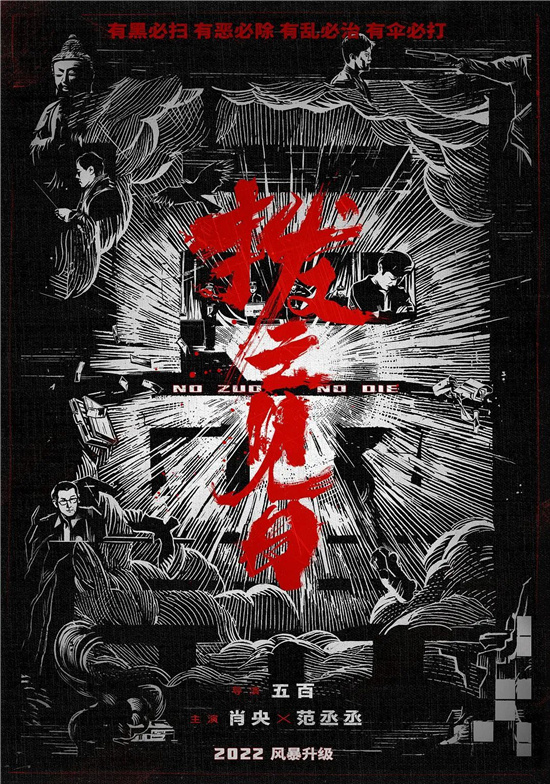

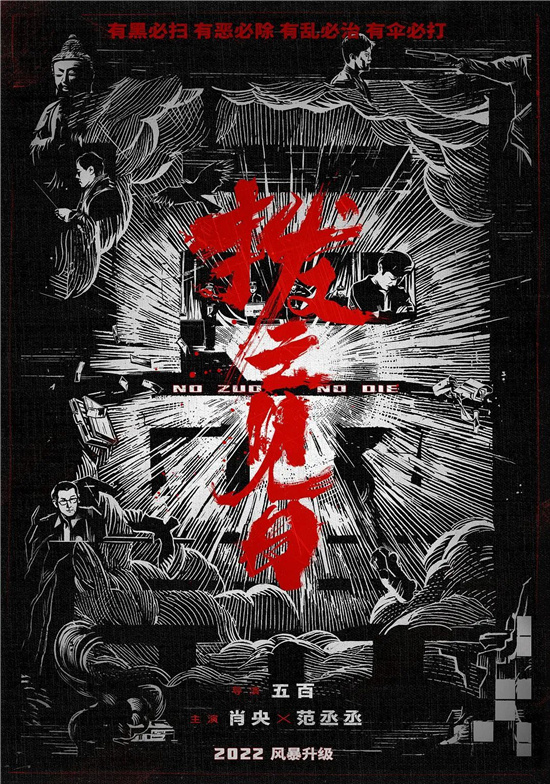

Sweep the Black: Clear the Clouds and See the Sun

Director:

Starring:/

After the success of his drama series, the director turns to film and continues to focus on the fight against organized crime.

The film tells how, under the guidance of the central supervision team, frontline police in Kuizhou City’s special campaign against organized crime punish the wicked and uphold justice. Shawn and Adam co-star as a criminal police mentor and his apprentice. Presumably they will also take on action scenes in the showdowns with the gangs.

The Great Night

Director:

Starring Adam//

One look at Adam’s styling, complete with a full head of "flaxen" hair, and you have to ask: who is this "non-mainstream", "social shake" type?

Beyond the styling, The Great Night is also decidedly non-mainstream in genre and story. It places thriller and comedy elements in front of livestream and hidden-camera lenses, following three small-time streamers as they sneak onto a film set to tail the lead actress. Putting ourselves in the audience’s shoes, we really do start to wonder just how "amazing" this night will be.

Unfamiliar Road to Life

Director:

Starring:/Adam//

Director Xiaoxing Yi’s new comedy tells the funny story of a father-in-law putting his prospective son-in-law to the test. Qiao Shan and Mary play a married couple, Zhang Jingyi and Adam play their daughter and prospective son-in-law, and the family of four sets off on a truck trip. Adam and Chang Yuan reportedly play romantic rivals in the film.

Save the Suspect

Director:

Starring Zhang Xiaofei///

After co-directing Sniper with her father Zhang Yimou, Zhang Mo directs this film on her own. It is her second solo directorial work and takes on the crime suspense genre.

The story centers on Chen Zhiqi, a top Chinese female lawyer who defends a death-row inmate and overturns his conviction, only to be drawn against her will into a criminal case full of unanswered questions. Alongside Exchange of Life, this is another leading role for Zhang Xiaofei since she won the Golden Rooster Award for Best Actress, and the character type is very different.

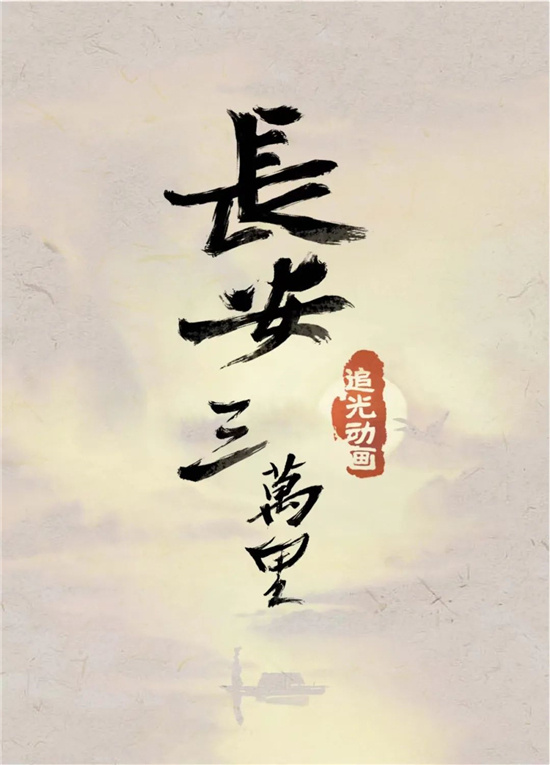

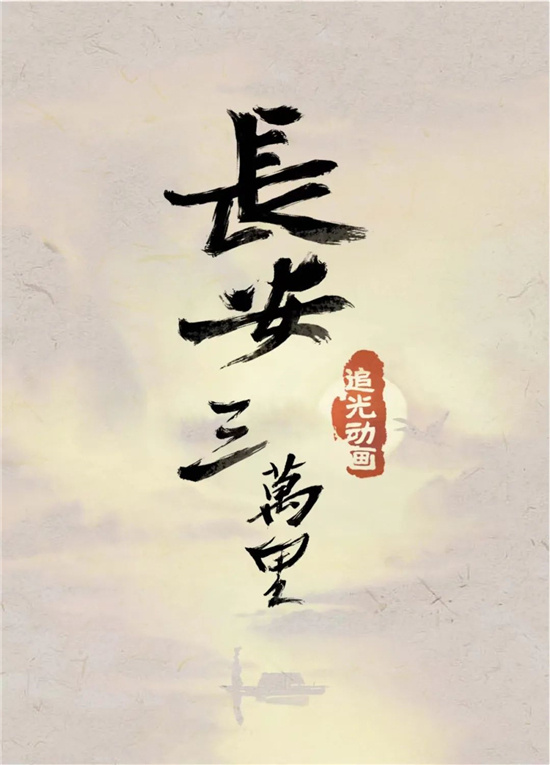

Thirty Thousand Li in Chang’an

Director: Xie Junwei/Zou Jing

When Light Chaser Animation’s new film was released last summer, a teaser for this film quietly appeared in the post-credits scene. The story begins after the outbreak of the An Shi Rebellion and, through the recollections of Gao Shi, a Tang Dynasty military governor trapped in a besieged city, looks back on his lifelong friendship with the poet Li Bai.

Across the two films of the "New God List" series, Light Chaser Animation’s creative team has fully demonstrated its distinctive take on classical and traditional aesthetics. In action scenes, the latest entry, Yang Jian, has amply proven the team’s imagination. Thirty Thousand Li in Chang’an will open a new chapter in the team’s animation work.

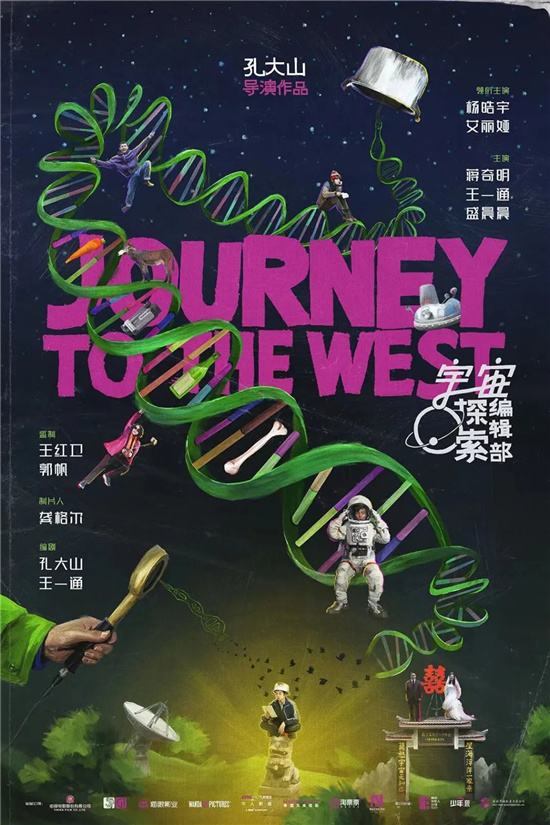

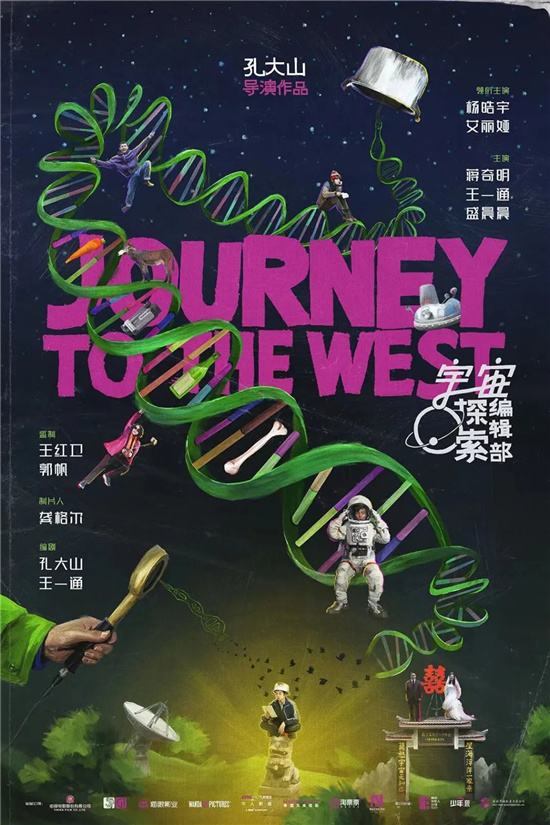

Journey to the West

Director:

Starring:/

Although it is far smaller in scale, within the category of "Chinese science fiction" this film carries expectations from many viewers no lower than those for The Wandering Earth II. After decades of searching for extraterrestrial civilization, the "mad editor" finally receives a signal one day and sets out on an absurd quest, carrying a lifelong puzzle with him.

The film drew great attention and discussion when it screened at various film festivals. Following the milestone that came before it, Journey to the West, whose Chinese title translates as Cosmic Exploration Editorial Department, may write a brand-new footnote for Chinese science fiction.

Ex-4: Early Marriage

Director:

Starring:///

Five years on, the full cast of Ex-3 returns. The sequel is directed by Yu-sheng Tian, director of the Ex series, and stars Han Geng, Ryan, Kelly and Mengxue Zeng.

The story still follows Meng Yun (Han Geng) and Yu Fei (Ryan), now of marriageable age: one faces uncertainty about where his heart belongs, while the other faces the test of a "marriage cooling-off period". Ex-3 earned nearly 2 billion yuan at the box office, making it a major dark horse among romance films. Will Ex-4 have the same pull?

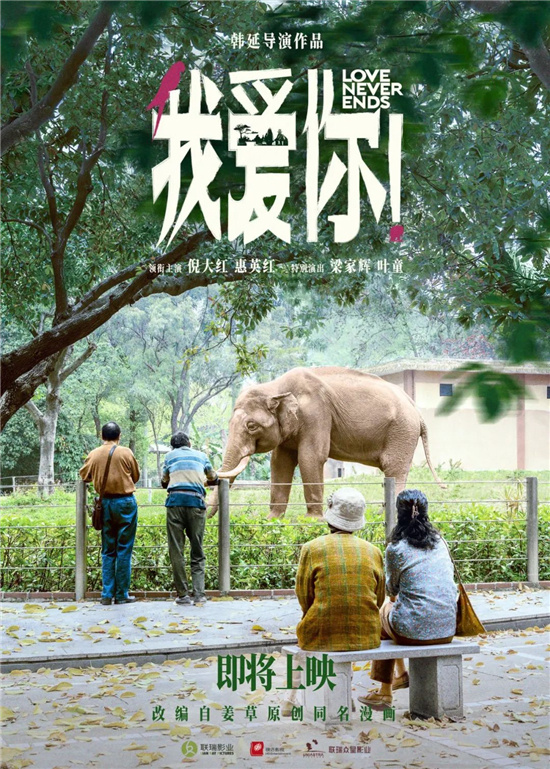

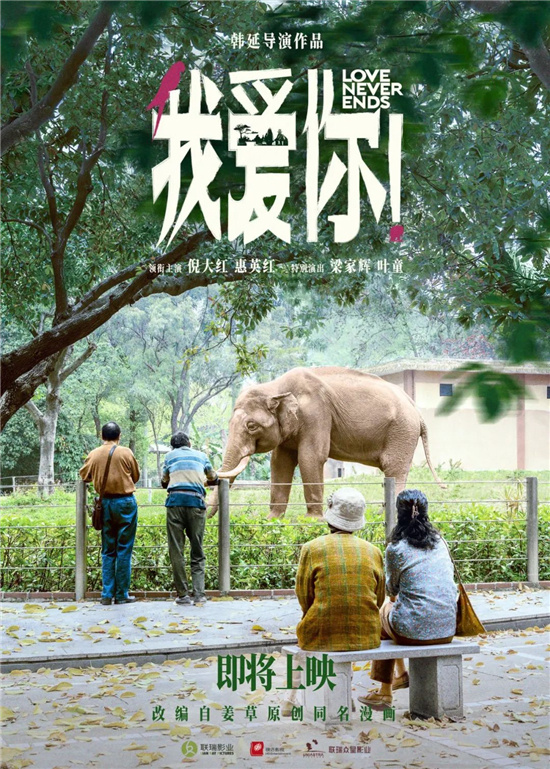

I Love You!

Director:

Starring:/Kara Wai//

Across his previous films, Han Yan has clearly shown a knack for teasing out unusual emotional threads within realist subject matter.

Focusing on the love lives of ordinary people in their 60s, the film stars Dahong Ni, Kara Wai, Tony Leung Ka Fai and Cecilia Yip, all powerhouse performers. After so many sugary romances about young people, what does romance look like for the elderly? And while exploring love, how will the film handle the theme of life itself? We look forward to finding the answers in two stories of "late-life love".

Parrot Killing

Director:

Starring://Youhao Zhang

With a novel subject inspired by news reports of "pig-butchering" scams, it is also a "female revenge film". Zhou Ran, who has fallen victim to such a scam, travels to a small town in Fujian to track someone down. Through a parrot, she happens to meet Lin Zhiguang and Xu Zhao, and a battle of emotions unfolds between her and the two mysterious men, blending romance and suspense.

This is the new director’s first feature film, and a writer has been invited to serve as artistic director and oversee its quality. Zhou Dongyu and Zhang Yu team up for the second time after the short film "Love Alone". Can they deliver standout performances again? We will wait and see.

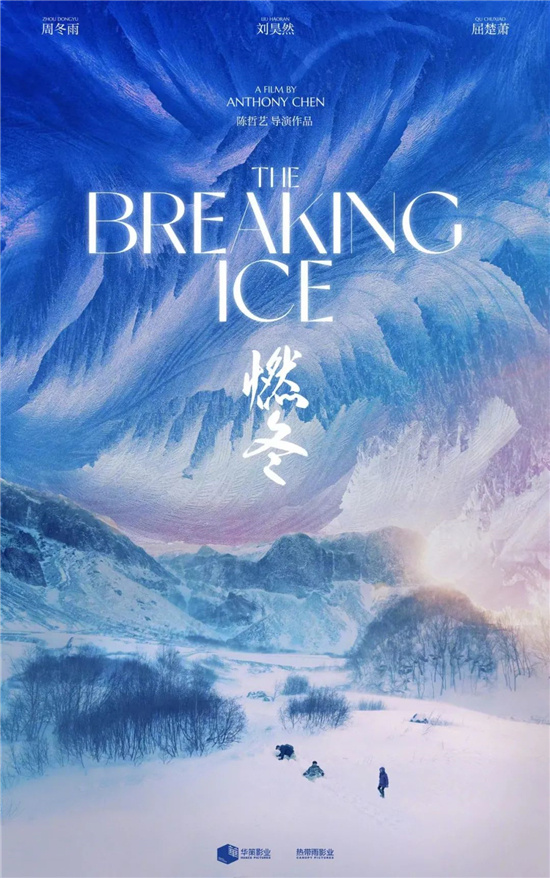

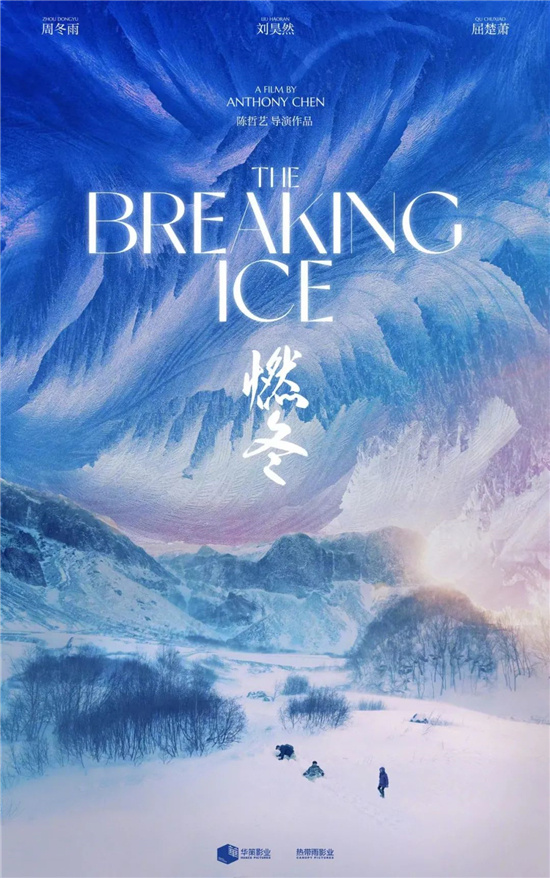

Burning Winter

Director:

Starring Zhou Dongyu//

Their first collaboration has yet to reach audiences, and Zhou Dongyu and Haoran Liu have already teamed up a second time. Judging from the synopsis, the film seems to focus on its three protagonists and to tell its story through the relationships between them rather than through obvious plot-driven conflict.

As if to echo the title Burning Winter, the film was shot in the extreme cold of Northeast China. What kind of story between one girl and two boys can set a snowy world alight? We also look forward to the sparks that three young actors born in the 1990s will strike under the lens of Anthony Chen.

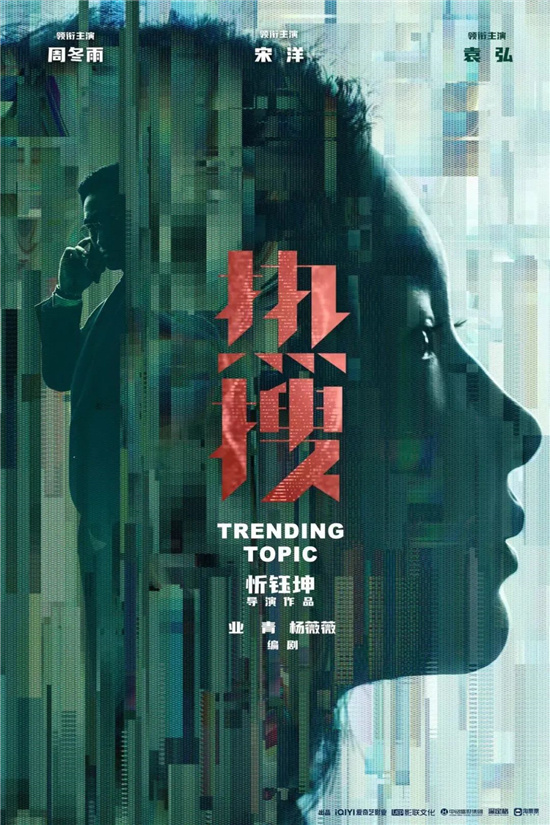

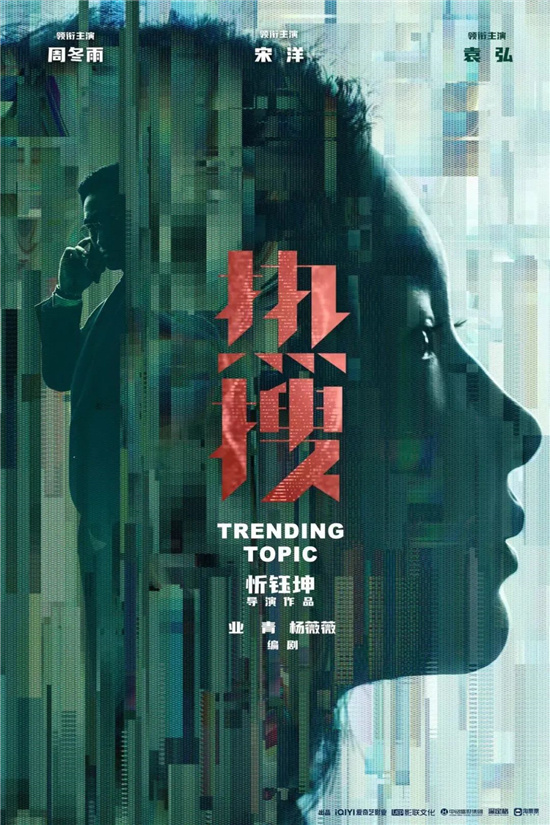

Hot Search

Director:

Starring Zhou Dongyu//

Director Xin Yukun’s new film, arriving four years after Violent Silence, brings together Zhou Dongyu, Song Yang and Justin. Unlike the crime suspense of his earlier work, Hot Search announces its topicality in its very title, taking aim at today’s most complicated and chaotic arena: online public opinion.

In the film, Zhou Dongyu plays the editor-in-chief of an online media outlet whose viral article unexpectedly entangles the characters played by Song Yang, Justin and others. Trending online topics have drawn growing attention from filmmakers over the past two years. We wonder what fresh angle Xin Yukun will find this time.

Transparent Heroes

Director:

Starring:/Wang Hao

Over the past two years, a new generation of comedians has emerged across different platforms. Shi Ce and Wang Hao not only stand out among them but have also attracted a following of CP fans thanks to their "Hao Shi Shuang Shuang" chemistry. ZhangDiSha has chosen these two newcomers for his new film, which is also his next challenge as a director.

Whereas his last film had the backing of a major IP, Transparent Heroes relies more on a crowd-pleasing script. The addition of a fantasy love story may seem improbable, but it suits the theatrical strengths of two actors who are frequently on stage. We look forward to a successful crossover for them.

Forget About Each Other

Director:

Starring: Liu Haocun/

Like his directorial debut, Liu Yulin’s new film is adapted from a novel, but unlike that film, its style turns to sweet campus romance. Adapted from "I am only one you from the world", it tells of a hero and heroine who meet and fall in love at the best time of their lives and finally drift apart and forget each other.

The film stars Liu Haocun and Song Weilong, a pairing of near post-2000s actors that guarantees a youthful campus feel. Liu Haocun’s earlier work in A Little Red Flower and Four Seas shows how well she suits this kind of pure, sweet heroine, and Song Weilong will reportedly shed the "aloof" image of his previous roles to play a dreamy, self-dramatizing teenager.

Countdown Love You

Director:

Starring:/

At the end of last year, "Light Me Up to Warm You" showed audiences that Chen Feiyu is not only "Arthur" but also a swoon-worthy "domineering" younger man in romantic scenes. In this film, he partners with Zhou Ye, a rising actress of the post-95 generation, in another story of a fateful encounter, a regretful near miss and mutual devotion.

A pairing of handsome leads rarely goes wrong in a plot like this. We hope Chen Feiyu and Zhou Ye have enough on-screen chemistry to add to the sweetness of the film.

Between Trees and Grass

Director:

Starring: Leo/

Directed by Gu Xiaogang, this is the second work in his "Landscape Map" series. The film tells a story of redemption between a mother and son in the tea hills of West Lake. Leo and Angel play the mother and son in an interpretation of the legend of "Mulian Saving His Mother".

Compared with the grassroots cast and crew of Dwelling in the Fuchun Mountains, Between Trees and Grass not only features two professional star actors but also brings on an artistic director, an upgrade both in front of and behind the camera. What will be different about Leo’s performance when he takes on an art film? Dwelling in the Fuchun Mountains won wide acclaim at home and abroad, and fans have high hopes for Between Trees and Grass as well.

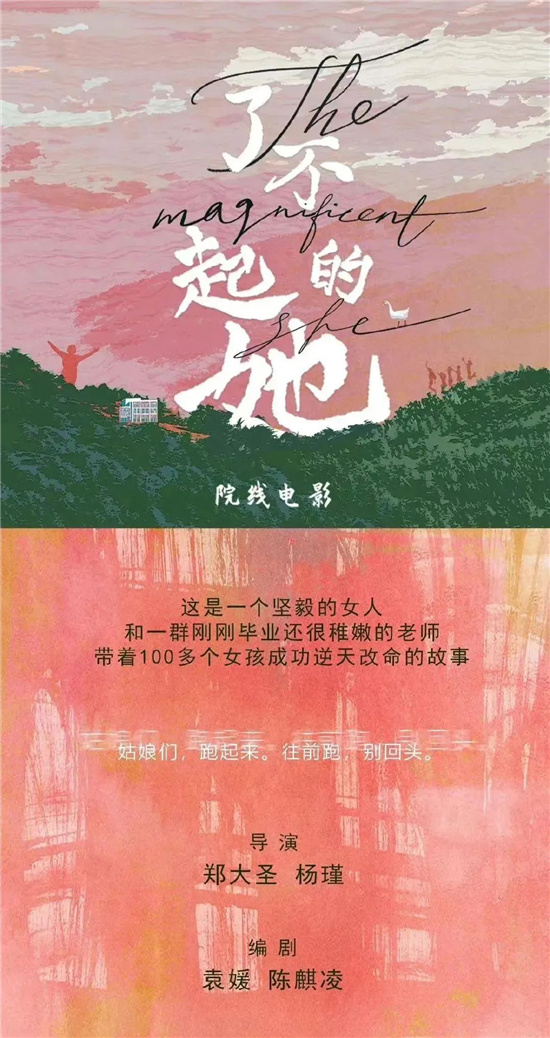

The Great She

Director: Dasheng Zheng/Yang Jin

Principal Zhang Guimei has helped countless girls leave the mountains and change their lives, and her perseverance continues to influence and inspire more women. More and more artistic works now focus on this "lamp-lighting principal": following a stage play and a TV series, the film The Great She also sets out to adapt Zhang Guimei’s true story in tribute to this role model of our time.

More than a biopic, The Great She, directed by Dasheng Zheng and Yang Jin, hopes to learn from this determined woman how she helped more than 100 girls "change their lives". As the released materials put it: "Girls, run forward".

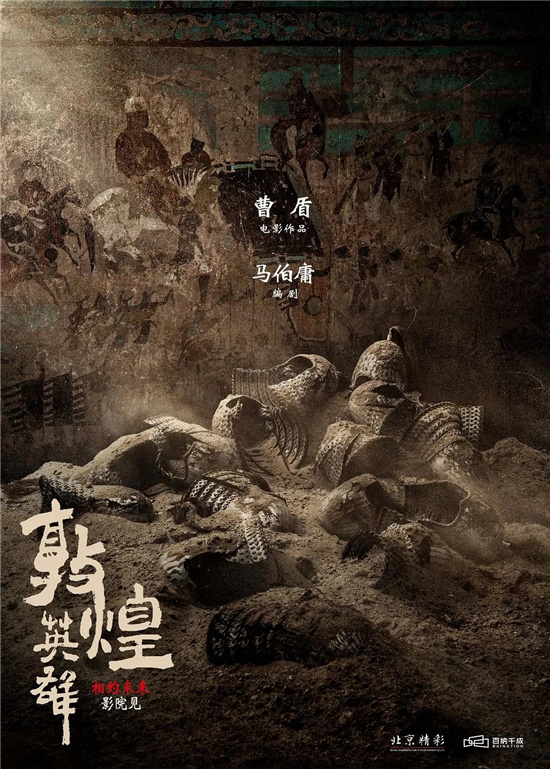

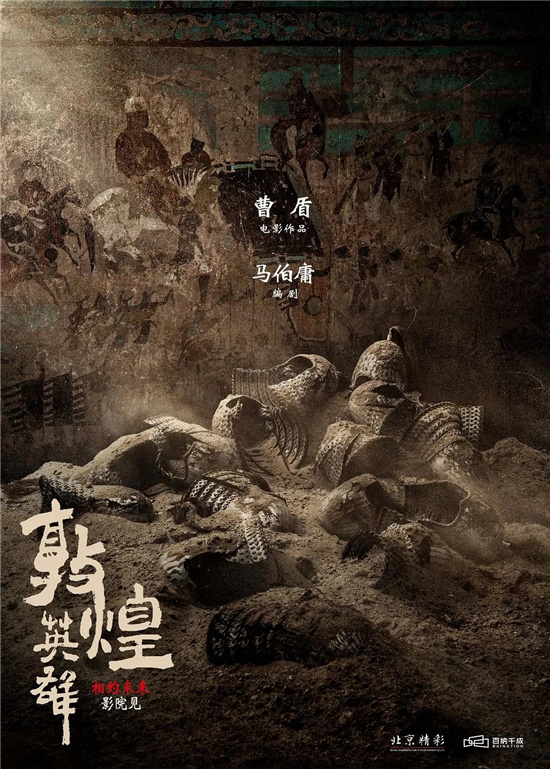

Heroes of Dunhuang

Director:

Screenwriter:

Cao Dun and Ma Boyong collaborate again after The Longest Day In Chang’an, and this is also the first live-action film in the "China Youth Universe" to reach audiences.

The story is based on the history of Shazhou (the old name of Dunhuang) returning to the Tang Dynasty. After the An Shi Rebellion, the soldiers and civilians of Shazhou, led by the Han Chinese leader Zhang Yichao, spared no effort in fighting separatist forces and finally restored Shazhou to the Tang Dynasty. We look forward to a gripping and beautiful period action drama.

Basketball Champion

Director: Gao Hu

Starring: Wei Xiang/Wang Zhi/

After a major hit, Wei Xiang takes on a leading-man vehicle of his own. This time he is no longer a downcast football coach but is out to win a basketball championship.

The film tells of a basketball coach, played by Wei Xiang, who is forced to teach at a school for students with intellectual disabilities and lead a group of players with "negative ability scores". Having always believed that "you play to win", he must get through coaching sessions in which he and his players constantly talk past each other, driving him to despair. Allen, Wang Zhi and others join Wei Xiang in the cast. Will Allen deliver an even more memorable performance than "Silly Spring" this time?

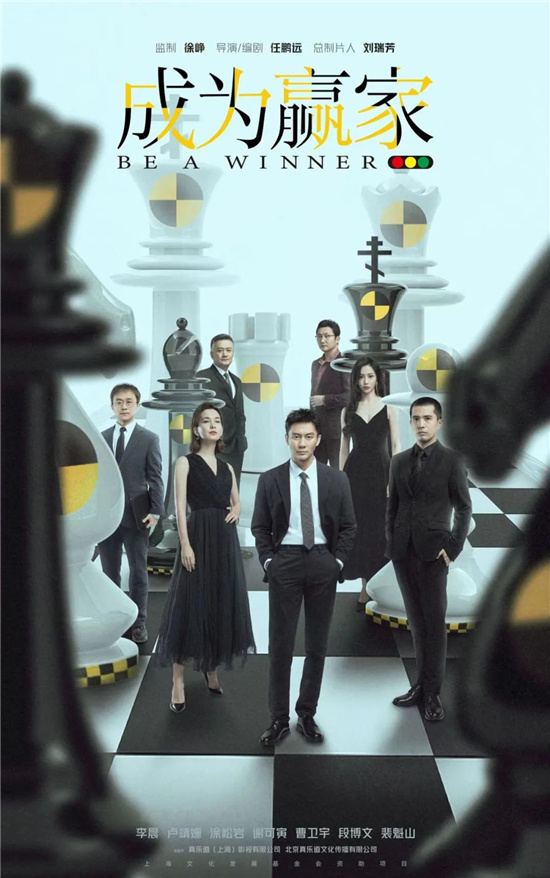

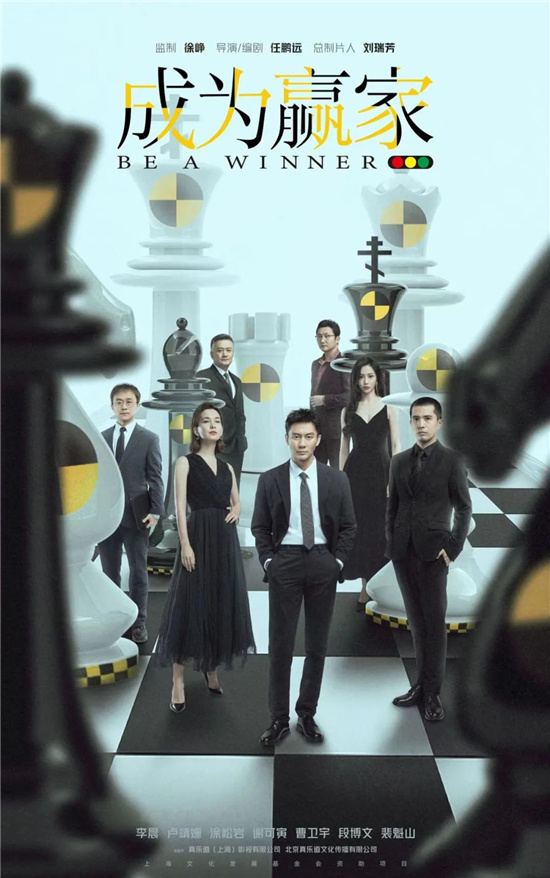

Become a Winner

Director:

Starring:/

It is not easy to tell a "business war" story within the running time of a feature film, but the director has decided to take on this difficult challenge.

The film, starring Jerry Lee, who is also its producer, stages a fierce confrontation with business predators. The director is understood to have chosen a novel perspective that will reveal the little-known story behind ordinary people’s "counterattack". We look forward to a workplace battle of wits in which neither side lets up.

Nightlife in Changsha

Director: Zhang Ji

Starring: Yin Fang/Zhang Jingyi

Nightlife in Changsha is the first film to focus on the people of Changsha and show the city’s customs. Zhang Ji, who serves as producer, director and screenwriter, previously wrote Leap and Dearest, and this is his first outing as a director. The film links scenes of contemporary urban nightlife through several stories that unfold in a single night.

Back to Tibet

Director:/

Starring: Song Yang/

The film’s premiere at the Beijing International Film Festival drew the attention of many fans, and tickets were hard to come by. The plot of "Back to Tibet" revolves around Lao Kong, who works in Tibet, and Jiumei, a Tibetan translator. The film depicts in detail how the relationship between the two develops and shows the cultural collision between the Han and Tibetan peoples. Its camera also captures on a grand scale both the romance of the Tibetan landscape and the everyday warmth of Tibetan life.

Many viewers described Back to Tibet as romantic and poetic after seeing it. Those who missed the festival screening may want to see it in cinemas once it is released and feel the breathtaking beauty of this vast land.

My Friend Andre

Director:

What is it like to shoot a film called My Friend Andre with your own friend? Dong Zijian must know the feeling well. He and Haoran Liu have been friends for many years and know each other thoroughly. The rapport and emotion are already in place, so the only test Dong Zijian faces is how to capture the best performances as a director.

For Xiao Dong, who has always come across as a "literary youth", "Andre" is a very good creative opportunity. We also hope that with this film the two Central Academy of Drama classmates can move up from being the industry’s best-known buddies to a golden on-screen partnership.

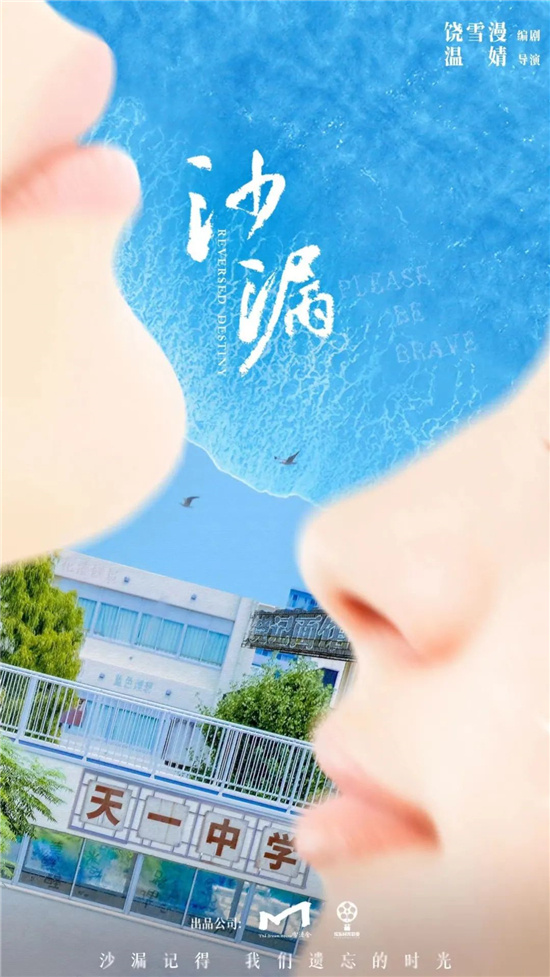

Hourglass

Director: Wen Jing

Screenwriter:

Hourglass is one of the founding works of "painful youth" literature, and its film adaptation carries a strong sense of revival. The film tells the story of the girl Mo Xingxing, who develops a mental illness after her mother’s unexpected death. Only many years later does she discover that the friend who helped her out of the darkness was the one who "killed" her mother.

For many people born in the 1980s and 1990s, Rao Xueman’s youth novels are part of their memories. This time she is also deeply involved in the making of Hourglass as one of its main creators. But in the context of 2023, can adaptations of these works still attract a mainstream audience born after 2000 or even 2005?

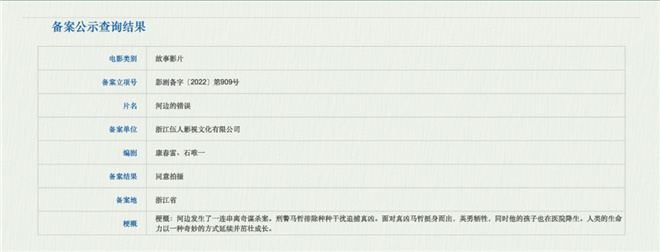

Mistakes by the River

Director:

Wei Shujun is one of the most promising directors of the new generation. He has a completed film that has not yet been released, and another, "White Crane with Bright Wings", is in production. He is now shooting a new film adapted from a novel by Yu Hua.

According to the project filing, the film tells how "a series of bizarre murders happens by the river. Criminal police officer Ma Zhe overcomes all kinds of interference to hunt down the real murderer. Confronting the real murderer, Ma Zhe steps forward and dies heroically. At the same time, his child is born in the hospital. Human vitality continues and thrives in a wonderful way."

The story may be substantially revised from the original, but the underlying tone of Yu Hua’s novels will surely give the film the power to interrogate human nature and reflect on reality. The lead is said to be a highly popular, much-watched actor; we await the film’s official announcement.

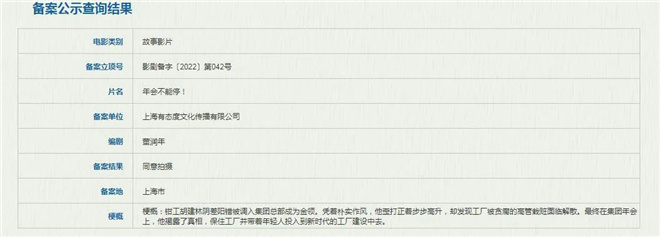

The annual meeting can’t stop!

Director/screenwriter:

Run Nian Dong, the screenwriter of "Mr. Six", will once again write and direct a new film.

According to the project filing, the film tells how "fitter Hu Jianlin is transferred to the group headquarters by mistake and becomes a gold-collar worker. With his plain and simple style he is promoted step by step, only to find that the factory has been set up by corrupt executives and faces dissolution. Finally, at the group’s annual meeting, he reveals the truth, saves the factory and leads young people into building the factory in the new era." It will surely be an absurdist comedy with a realist theme. The cast has yet to be announced by the producers.