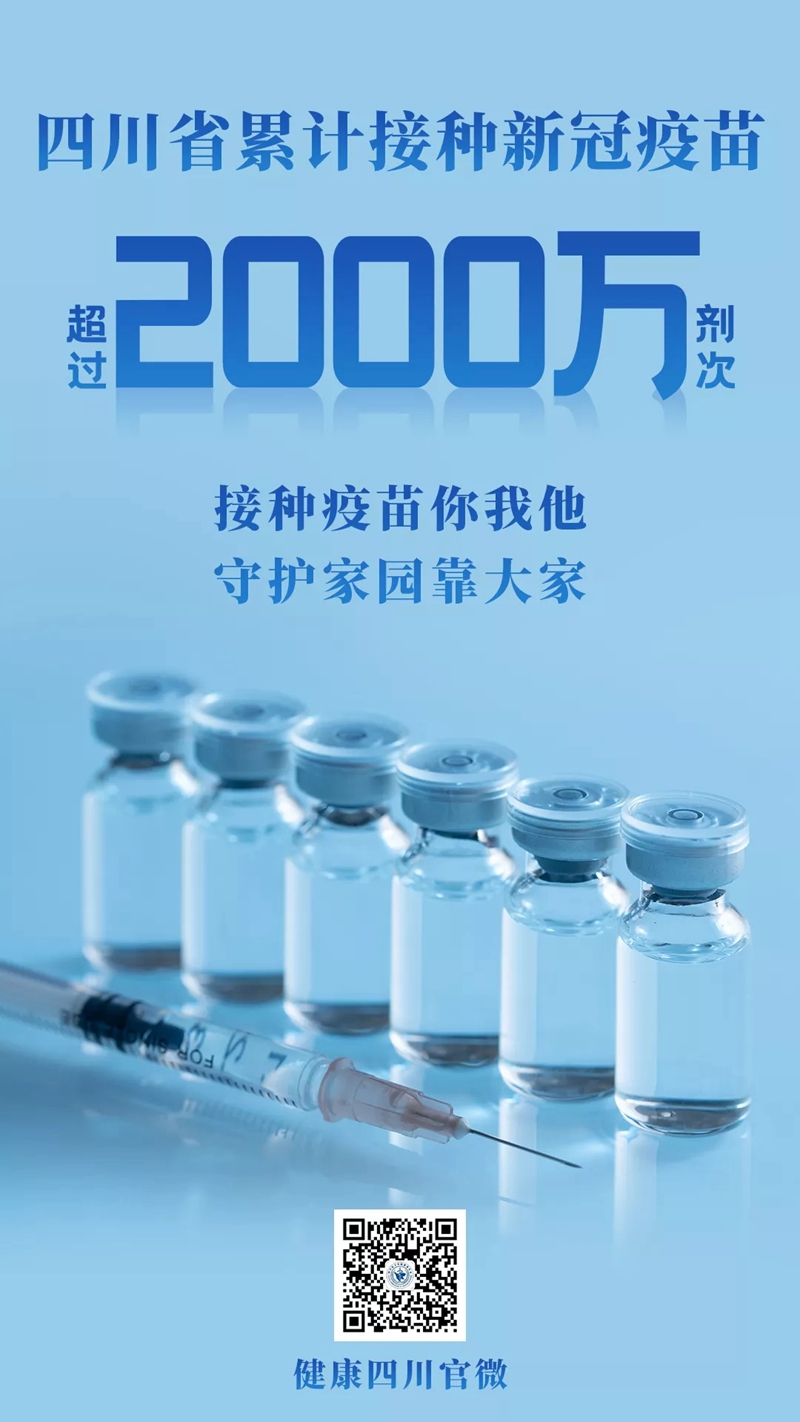

COVID-19 vaccinations in Sichuan exceed 20 million doses

As of 9:00 on May 24, Sichuan had administered 20 million doses of COVID-19 vaccine. Since May 17, the province has averaged more than 1 million doses a day, and on May 22 it administered more than 2.1 million doses in a single day, the highest in the country.

These figures reflect strong supervision and deployment, as well as the extraordinary efforts of medical staff across the province’s health system.

Since COVID-19 vaccination began, Sichuan has emphasized fine-grained management and precise coordination, so that vaccine doses are matched to people and people to vaccine doses. At the same time, driven by the provincial emergency headquarters, the province expanded the capacity of its immunization planning information system, drew up a plan for COVID-19 vaccination publicity and public-opinion monitoring, and fostered a social atmosphere that encourages people to get vaccinated, so that vaccination across the province has proceeded in an orderly manner.

Three forces oversee the work  Cities (prefectures) deploy in force

Sound, well-founded policy is the most important guarantee of fast and efficient vaccination. Party committees and governments at all levels in Sichuan attach great importance to COVID-19 vaccination, have promoted it as a major political task, and have implemented it conscientiously. The result is "three forces overseeing the work" (the provincial Party inspection team, the provincial government supervision office, and the Provincial Health Commission), "four mechanisms driving vaccination" (government leadership, community governance to locate people, the health system administering injections, and departmental support), and "five teams carrying it out" (one county-level leader and one public security team).

At the same time, resources of all kinds have been coordinated, and the information system has been upgraded and optimized to support large-scale COVID-19 vaccination. Working with the provincial big data center and information system vendors, the province set up a special task force of more than 170 people and established five working systems: daily scheduling, special work reports, operation monitoring, system upgrades, and emergency response. It has held daily consultations to assess the situation and strengthened overall coordination among departments.

The reporter also learned that the Provincial Medical Insurance Bureau and the Department of Finance have paid 3.6 billion yuan for vaccines, and that the Provincial Medical Insurance Bureau and the Provincial Health Commission have jointly formulated a policy on vaccination costs. The principal leaders of governments at all levels have signed target responsibility letters, tier by tier, to assign clear responsibility and vigorously push the work forward.

These policies and safeguards have kept vaccination running smoothly. "Our community did not have enough space, so the sub-district office immediately found a venue within its jurisdiction, which is how we got the Yingmenkou temporary vaccination site," Guo Shuiyuan, deputy director of the Yingmenkou Community Health Service Center in Jinniu District, Chengdu, said in an interview. The temporary site is located at No. 13 Changting Road. Before it opened, its location and basic vaccine information had been widely publicized to local residents through leaflets, posters, banners, and door-to-door visits. "The temporary vaccination site was set up in mid-April. We have 10 vaccination stations working in two shifts, and from the evening of April 25 to May 22 we administered 24,000 doses."

Cities (prefectures) have also tailored their measures to local conditions to make sure vaccination is carried out effectively. The Mianyang municipal government has included COVID-19 vaccination in its government target assessment. Despite tight finances, the Luding County government and the Kangding municipal government in Ganzi Prefecture invested more than 1.5 million yuan and 4.2 million yuan, respectively, in the facilities and equipment needed for COVID-19 vaccination.

Luzhou has 140 COVID-19 vaccination sites, 118 of which have received vaccine supplies and begun vaccinating. As of 15:00 on May 21, Luzhou had administered more than 1 million cumulative doses of COVID-19 vaccine. Information on COVID-19 vaccine distribution and administration has been fully incorporated into the immunization planning information system, so that the source and destination of every vaccine can be traced and vaccination can proceed safely and efficiently.

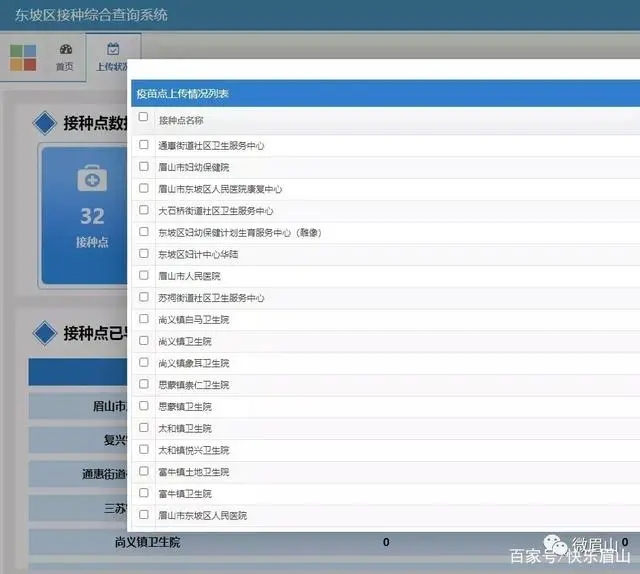

In Meishan, a comprehensive vaccination query system has played an important role. It tracks precisely who has not yet been vaccinated, who has received the first or second dose, and the timing of and interval between doses. Lists of people due for vaccination can be exported promptly and sent back to the townships (sub-districts) through the OA system, so that they can organize people for vaccination precisely. In addition, 47 4G wireless routers have been installed across the fixed vaccination sites and mobile task teams to keep vaccination moving quickly.

Chaotian District in Guangyuan has combined new and traditional media to publicize COVID-19 vaccination continuously, reaching both urban and rural areas. Early on, the district held repeated training sessions on handling adverse reactions during large-scale COVID-19 vaccination, and it secured the necessary personnel, supplies, equipment, and vehicles.

In Enyang District, Bazhong, three large-scale vaccination sites and 37 routine vaccination sites were set up following the principles of the "eight haves" (medical staff, medicines, equipment, ambulances, venues, technical support, access, and a green-channel referral route) and the "four zones" (waiting area, vaccination area, observation area, and treatment area for suspected adverse reactions).

Mianning County in Liangshan Prefecture set up a leading group headed by the deputy secretary of the county Party committee and county magistrate, and the county government signed a letter of responsibility with each township (sub-district). County leaders assigned to townships under the "Five Ones" arrangement were the first to get vaccinated and personally supervised the work, ensuring that each round of vaccination was completed quickly and efficiently and the vaccine inventory was "cleared". In Ningnan County, cadres at the county, township, village, and village-group levels have closely combined vaccination with forest and grassland fire prevention and resettlement work, mobilizing residents village by village and household by household and doing everything possible to ease people’s concerns and increase their willingness to be vaccinated.

Standardized vaccination services  Letting the public get vaccinated with peace of mind

"What’s your name? Please check the name of the vaccine." At 3 p.m. on May 20, at the COVID-19 vaccination site on Shahe Street, Jinjiang District, Chengdu, a nurse patiently explained the name and purpose of the vaccine to the people waiting to be vaccinated, checked their information, and then vaccinated the local residents. A young man surnamed Zhou, who had just been vaccinated, said: "When the community family doctor came to our neighborhood to hold a free clinic, I realized I needed to get vaccinated as soon as possible, so I made an appointment yesterday and came to queue early this morning. I waited more than 40 minutes, and the vaccination itself was very fast."

Standardized vaccination services are a key lever for sustaining Sichuan’s vaccination speed. Based on the characteristics of the vaccination workflow and of mass vaccination, and drawing on the experience of leading provinces, Sichuan has expanded user access to the system, streamlined the vaccination process, built a set of indicators for monitoring system operation with yellow and red warning thresholds for real-time monitoring, promptly eliminated operational risks, defined four levels of emergency status for the information system, and drawn up a response procedure for each level to keep the system running stably.

A total of 4,019 vaccination sites have reportedly been set up across Sichuan. At the 12 sites visited by the stationed working group, the layout was standardized and the workflow sound, and governments at all levels have given vaccination staff rigorous training. Staff strictly follow the "three checks and seven verifications" required by the operating rules and provide services in accordance with the law. Every vaccination site is fully stocked with emergency drugs and equipment and staffed with medical personnel, and each site is backed by a Grade II-A or higher hospital responsible for treatment. More than 1,200 emergency drills have been held, and four high-level general hospitals, including Huaxi Hospital, have been designated as provincial-level treatment hospitals.

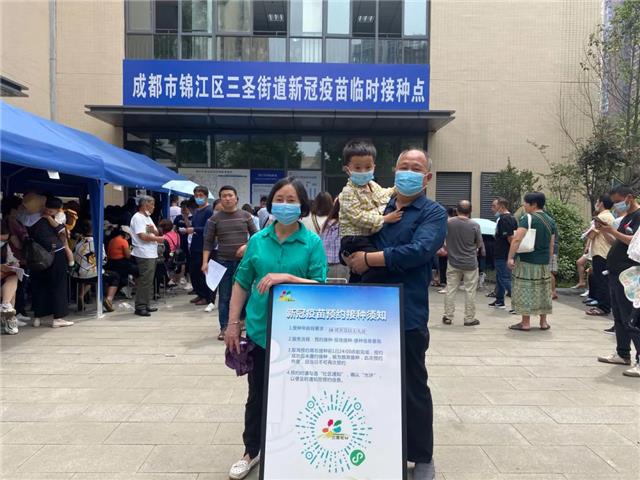

At 7:30 a.m. on May 20, a long line had formed in front of the temporary COVID-19 vaccination site on Sansheng Street, Jinjiang District, Chengdu. At the scene, the reporter saw that the site handled appointment registration, health screening, blood pressure checks, pre-screening registration and informed consent, vaccination, observation, and certificate issuance, forming a strict closed loop. Doctors carefully asked each person about their medical and allergy history, measured everyone’s blood pressure, and screened out those unsuitable for vaccination. Every step was explained in detail, and every part of the process was carefully arranged to interlock with the next. Volunteers guided people through the queues in each area, explaining carefully and patiently answering questions. The sub-district assigned dedicated staff to keep order and coordinate communication on site, ensuring that everyone eligible was vaccinated and that the site remained orderly.

On the afternoon of May 21, a vaccination-site banner hung at the entrance of the Neijiang Urban Planning Exhibition Hall, next to Wanda Plaza in Dongxing District, Neijiang, and fencing at the entrance kept the queue in order. The first floor had been divided into a pre-screening and triage area, a registration area, and a vaccination area, fully furnished with desks, chairs, computers, and signs, while staff moved between them preparing materials and testing equipment. At the back of the hall were an observation area and an area for handling adverse reactions.

According to Li Li, director of the Xilin Community Health Service Center, who is in charge of preparing the site, staff began setting it up three days earlier, and personnel, supplies, power, and everything else were now ready. The site has 8 registration points and 5 vaccination tables, with a planned capacity of about 2,500 doses a day.

"Convenient and reassuring" is the comment heard most often from vaccinated residents. Ms. Zhang, who works on Sansheng Street in Chengdu, registered for COVID-19 vaccination that same day. "Vaccination protects the health of myself, my colleagues, and my family," she said. "Even though there are a lot of people, we all understand. The medical staff work very hard, and every step puts people at ease."

At the Nanhu Community Health Service Center in the Zigong High-tech Zone, Xiong Heyu, a resident who had just been vaccinated, said the whole family had been vaccinated, the process was very smooth, no one felt any discomfort, and they all felt relaxed. Xiong added that vaccination provides an extra layer of protection, which is a great benefit, and expressed the hope that everyone would come forward to get vaccinated.

Heartwarming measures  Making vaccination a warmer experience

Sichuan’s COVID-19 vaccination numbers have reached new highs. Behind these figures lie the understanding and support of everyone who has been vaccinated and the considerate service provided by staff throughout the vaccination process.

Potted plants and TV dramas to ease tension, priority lanes for those who need extra care, free haircuts for the public, cooling traditional Chinese medicine decoctions for the summer heat… To make vaccination warmer and more convenient, vaccination sites across the province have come up with all sorts of creative ideas.

At 11:00 a.m. on May 20, the reporter arrived at the temporary centralized COVID-19 vaccination site on Yongquan Street, Wenjiang District, Chengdu. The first-floor lobby had been set up with stools, water dispensers, paper cups, and common medicines, and some residents were resting and waiting there. According to Gao Qin, office director of the Yongquan Street Community Health Service Center, residents who have queued for a long time are directed to rest in the waiting area until staff call their number range, at which point they rejoin the queue. In the vaccination area on the second floor, more than 60 pots of lush green plants serve as partitions and help ease the tension of people being vaccinated.

At some of the temporary large-scale vaccination sites in Chaotian District, Guangyuan, "Little Red Cap" and "Red Armband" volunteers moved through the different areas, providing guidance and keeping things orderly. Some sites also help people relax by providing books, magazines, and newspapers and by showing films and TV dramas, striving to create a comfortable, pleasant vaccination environment for local cadres and residents.

"The government is very considerate. While you wait for your shot, you can have your blood pressure checked and get a haircut. It really gets several things done at once…" On May 18, people streamed into the COVID-19 vaccination site in Baixi, Xuzhou District, Yibin. Outside the site, volunteer services such as a "caring clinic" and "caring haircuts" were lined up one after another, offering all kinds of free services to people coming to be vaccinated. "We give a queue number to everyone who comes for vaccination. While waiting, or after being vaccinated, they can go to the volunteer service points for free services such as medical consultations and haircuts," said volunteers handing out queue numbers in front of the site.

Beyond people’s mood and experience during vaccination, even the "weather" has become a concern.

With early summer arriving in May, traditional Chinese medicine (TCM) practitioners in Suining have drawn on TCM’s distinctive strengths in prevention, health care, and heatstroke prevention to help COVID-19 vaccination proceed smoothly. To prevent heatstroke among people being vaccinated in hot weather and reduce the harm high temperatures can do to their health, Suining’s TCM practitioners made protecting people’s health a priority in the vaccination work, set up TCM distribution points at various temporary vaccination sites, and handed out free heatstroke-prevention decoctions to people being vaccinated. As of May 20, the city’s TCM institutions had set up 7 decoction distribution points and provided free cooling decoctions to more than 30,000 people.

Extended hours and overtime  Sparing people a wasted trip

To build an immune barrier as quickly as possible, medical personnel have become the "main force" of COVID-19 vaccination. Sichuan reportedly plans to give first doses to 28.81 million people by June 9 and second doses to 28.302 million people by June 30.

The reporter learned that, to keep COVID-19 vaccination moving quickly and steadily, many cities (prefectures) have worked hard to strengthen staffing at vaccination sites to meet public demand.

On May 11, Leshan’s first shelter-style COVID-19 vaccination site officially opened. It has 25 information-collection stations and 28 vaccination stations, is staffed by more than 110 medical workers, and can administer up to 9,000 doses a day.

So far, Ziyang has set up 90 vaccination units (temporary vaccination sites) with 359 vaccination stations, staffed by 3,154 professional vaccinators.

Ningnan County in Liangshan Prefecture has set up 17 fixed and 22 temporary vaccination sites staffed by 447 qualified vaccinators, with a daily capacity of more than 10,000 doses.

Through COVID-19 vaccination training and certification, Dongpo District in Meishan has built a reserve of 1,100 qualified COVID-19 vaccination staff.

Since province-wide large-scale vaccination began on May 17, many COVID-19 vaccination sites have adjusted their hours, working through the lunch break and extending service so that people do not make a wasted trip.

On May 17, at the vaccination site of Neijiang Second People’s Hospital, the hospital brought in vaccine from other sites and worked overtime until 11 p.m. so as not to turn away the people still waiting in line.

As of May 20, Yang Wanting, a public health physician at the Yongquan Community Health Service Center, had been working with her colleagues for nearly a month at the temporary centralized COVID-19 vaccination site on Yongquan Street, Wenjiang District, Chengdu. She said that since May 1, as vaccines arrived in batches, the site had entered a "peak" period. On May 19, to finish vaccinating 3,000 people on schedule, she and her colleagues worked continuously from 8:00 in the morning.

Likewise, the Deyang CDC was already busy at 4 a.m., with staff starting to distribute vaccines to keep COVID-19 vaccination going without interruption…